At a Glance

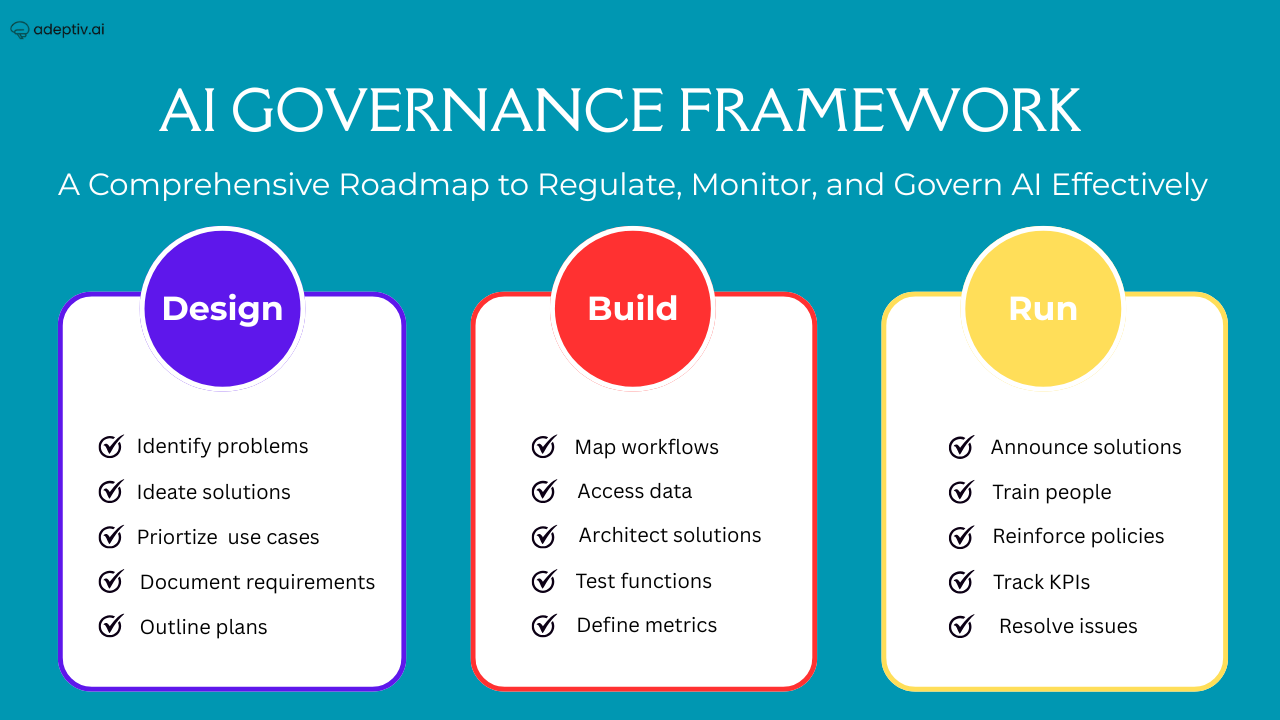

- A robust AI Governance Framework covers the full lifecycle: from planning and design through data operations, model development, deployment and ongoing monitoring.

- Core components include algorithm governance, data governance, risk & impact assessment, compliance management, and accountability structures.

- Regulations like the EU AI Act, standards like ISO/IEC 42001, and voluntary frameworks like the NIST AI Risk Management Framework (AI RMF) provide regulatory alignment and global best practices.

- Effective governance requires cross-functional coordination across teams (data, engineering, compliance, legal), clear ownership, and continuous lifecycle monitoring.

- A well-structured governance framework is not a burden — it is an enabler of trust, innovation, compliance readiness, and long-term scalability for AI initiatives.

Introduction

In an era where artificial intelligence is rapidly transforming business operations, consumer experiences, and societal interactions, managing AI responsibly is no longer optional — it is imperative. AI systems, if unmanaged, carry risks: bias, unfairness, privacy violations, operational instability, regulatory non-compliance, and reputational damage. For enterprises deploying AI at scale, a structured AI Governance Framework is the critical foundation to ensure AI delivers value reliably, ethically, and legally. This article explores a comprehensive governance blueprint, explains its components, and shows how organizations can operationalize governance across their AI lifecycle.

1. What Is an AI Governance Framework?

AI governance refers to the policies, standards, and practices that ensure AI systems operate safely, ethically, and in compliance with applicable laws and societal expectations.

A well-designed framework provides guardrails across the lifecycle of AI — from design and development to deployment, monitoring, and retirement — aligning enterprise objectives with risk management, compliance, and ethical standards. The framework functions as a structured management system that enables trustworthy, auditable, and responsible AI at scale.

2. Why AI Governance Frameworks Are Critical Today

2.1 The Risk Landscape Has Intensified

- AI is increasingly used in high-impact domains — healthcare, finance, recruitment, compliance — where failures cause real human or regulatory harm.

- Regulatory regimes are emerging worldwide. The EU’s AI Act, for example, mandates compliance controls, risk assessments, transparency, and accountability for high-risk systems.

- Standards such as ISO/IEC 42001 offer globally recognized criteria for AI risk management and governance.

- Without governance, AI-driven systems risk bias, unintended consequences, data misuse, lack of traceability, and potential legal, ethical, and reputational fallout.

2.2 Governance as a Strategic Enabler

When implemented well, governance offers benefits beyond compliance:

- Trust & Transparency: Stakeholders — customers, regulators, partners — gain confidence when AI decisions are explainable and auditable.

- Sustainable Innovation: Clear governance enables scaling AI responsibly without compromising ethics or compliance.

- Risk Mitigation: Early risk identification and monitoring reduce unexpected failures and regulatory exposure.

- Competitive Differentiation: Companies that embed governance become leaders in ethical, enterprise-grade AI deployment.

3. Core Components of a Comprehensive AI Governance Framework

A robust AI governance framework comprises multiple interlocking domains. Below are the key components:

3.1 Governance Lifecycle & Structure

Governance must align with the full AI lifecycle: from strategy and planning through data operations, model development, deployment, maintenance, and retirement. Lifecycle governance prevents fragmented oversight and ensures continuity.

Lifecycle governance also requires a clear organizational structure with defined roles and responsibilities (data officers, compliance leads, AI ethics leads, engineering, security, and legal) so that governance efforts do not become siloed.

3.2 Data Governance & Data Operations

Good AI starts with good data. Data governance plays a foundational role: data lineage, provenance tracking, access control, quality monitoring, privacy protection, encryption, and secure data operations ensure that AI training and inference are based on lawful, high-quality, and ethically managed data inputs.
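To make lineage and provenance tracking concrete, here is a minimal Python sketch of a dataset catalog. The `DatasetRecord` fields, the `trace_lineage` helper, and the example dataset names are all hypothetical and chosen for illustration; a production setup would more likely rely on a dedicated data catalog or lineage tool.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetRecord:
    """Hypothetical provenance entry for one dataset version."""
    name: str
    source: str                     # where the data came from
    legal_basis: str                # e.g. "consent" or "contract" (illustrative values)
    contains_personal_data: bool
    parents: tuple[str, ...] = ()   # content hashes of upstream dataset versions


def fingerprint(data: bytes) -> str:
    """Content hash used as a stable identifier for a dataset version."""
    return hashlib.sha256(data).hexdigest()


def trace_lineage(content_hash: str, catalog: dict[str, DatasetRecord]) -> list[str]:
    """Return dataset names from the given version back to its upstream sources."""
    seen: set[str] = set()
    names: list[str] = []
    stack = [content_hash]
    while stack:
        current = stack.pop()
        if current in seen or current not in catalog:
            continue
        seen.add(current)
        record = catalog[current]
        names.append(record.name)
        stack.extend(record.parents)
    return names


# Illustrative data only: a raw export and a cleaned version derived from it.
raw_hash = fingerprint(b"id,age\n1,34\n")
cleaned_hash = fingerprint(b"id,age_band\n1,30-39\n")
catalog = {
    raw_hash: DatasetRecord("crm_export_raw", "CRM system", "contract", True),
    cleaned_hash: DatasetRecord(
        "crm_export_cleaned", "cleaning pipeline", "contract", True, parents=(raw_hash,)
    ),
}

print(trace_lineage(cleaned_hash, catalog))
```

Keying records by content hash means a changed file always yields a new identifier, so a training run can point at exactly the data version it used.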

3.3 Algorithm & Model Governance

Algorithm governance defines how models are built, validated, tested, and documented. It demands transparency, explainability, interpretability, reproducibility, version control, and rigorous testing — including fairness audits, robustness tests, bias mitigation, and stress testing.
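A fairness audit usually begins with a simple, documented metric. The sketch below computes the demographic parity difference, which is only one of several possible fairness measures and is not prescribed by this article. The function names, the example data, and the `THRESHOLD` value are assumptions for illustration; an acceptable threshold is a policy decision.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive predictions (1) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Gap between the highest and lowest group selection rate (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Illustrative data: group "a" is selected 3 times out of 4, group "b" once out of 4.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

THRESHOLD = 0.2  # hypothetical policy value, not a regulatory figure
gap = demographic_parity_difference(predictions, groups)
print(f"parity gap = {gap:.2f}, within policy = {gap <= THRESHOLD}")
```

Recording the metric, the threshold, and the result alongside the model version is what turns a one-off check into audit evidence.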

3.4 Risk Assessment & Impact Management

Each AI system carries context-specific risks — from social or financial harm to privacy or compliance failure. A governance framework must include structured risk identification, impact assessment, classification (e.g. low, medium, high risk), and mitigation strategies. For high-risk systems, more stringent processes such as human oversight, manual reviews, and continuous monitoring are required.
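The classification step can be expressed as a small rule set. The Python sketch below is an assumption-laden illustration: the `UseCase` attributes, the scoring rules, and the control names are hypothetical and do not reproduce the EU AI Act's legal risk categories or any standard's criteria. It only shows how a tier can drive the stricter processes described above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class UseCase:
    name: str
    affects_individuals: bool   # decisions about specific people
    regulated_domain: bool      # e.g. healthcare, finance, recruitment
    fully_automated: bool       # no human review before the decision takes effect
    uses_personal_data: bool


def classify(use_case: UseCase) -> RiskTier:
    """Hypothetical rules: real criteria come from your own policy and applicable law."""
    if use_case.affects_individuals and use_case.regulated_domain:
        return RiskTier.HIGH
    score = sum([
        use_case.affects_individuals,
        use_case.regulated_domain,
        use_case.fully_automated,
        use_case.uses_personal_data,
    ])
    return RiskTier.MEDIUM if score >= 2 else RiskTier.LOW


# Controls per tier, mirroring the idea that high-risk systems need stricter processes.
REQUIRED_CONTROLS = {
    RiskTier.LOW: ["documentation"],
    RiskTier.MEDIUM: ["documentation", "periodic review"],
    RiskTier.HIGH: ["documentation", "human oversight", "manual reviews", "continuous monitoring"],
}

screening = UseCase("resume screening", True, True, True, True)
tier = classify(screening)
print(tier.value, REQUIRED_CONTROLS[tier])
```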

3.5 Compliance, Regulatory Alignment & Standards

Regulations like the EU AI Act, standards like ISO/IEC 42001, and voluntary frameworks like the NIST AI RMF provide the legal and normative foundation for governance. Compliance means aligning model risk classification, documentation, audit trails, reporting, and transparency with these requirements. Governance frameworks should map to them from day one to ensure readiness and avoid retroactive fixes.

3.6 Accountability, Ownership & Organizational Responsibility

Accountability underpins governance. Frameworks must define ownership of AI systems, responsibilities for decisions, human oversight points, and fallback procedures. Governance must include incident-response plans, redress mechanisms, documentation, audit logs, and transparency around AI decision processes.
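One way to make audit logs trustworthy is to chain entries by hash, so that later edits become detectable. The sketch below is a minimal illustration using only the Python standard library. The entry fields (`actor`, `action`, `system`) and the function names are hypothetical, and a real deployment would also need secure storage and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(body: dict) -> str:
    """Deterministic hash of an entry body (keys sorted for stable output)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], actor: str, action: str, system: str) -> dict:
    """Append an entry that commits to the hash of the previous entry."""
    body = {
        "actor": actor,
        "action": action,
        "system": system,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash and check that each entry links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, "risk-officer", "approved deployment", "credit-scoring-v2")
append_entry(audit_log, "ml-engineer", "rolled back model", "credit-scoring-v2")
print(verify(audit_log))
```

Because each entry includes the previous entry's hash, changing any past record invalidates every hash that follows it.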

3.7 Monitoring, Logging & Lifecycle Operations

Real-world AI is dynamic. Continuous monitoring for drift, bias, performance degradation, data changes, model confidentiality risks, and security vulnerabilities is essential. Logging, auditing, periodic review and maintenance are core to lifecycle operations.
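Drift monitoring can start with a single statistic per feature. The sketch below computes the Population Stability Index (PSI), one common drift measure among several; the article does not prescribe it. The bin count, the smoothing floor, and the 0.25 alert level (a widely quoted rule of thumb) are assumptions to tune per use case.

```python
import math


def population_stability_index(
    expected: list[float], actual: list[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a live sample of one numeric feature."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    baseline = proportions(expected)
    live = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(baseline, live))


# Illustrative data: the live sample is squeezed into the upper half of the range.
training_sample = [i / 100 for i in range(100)]
live_sample = [0.5 + i / 200 for i in range(100)]

ALERT_LEVEL = 0.25  # rule-of-thumb value, treated here as an assumption
psi = population_stability_index(training_sample, live_sample)
print(f"PSI = {psi:.2f}, alert = {psi > ALERT_LEVEL}")
```

Running a check like this on a schedule, and logging each result, gives the periodic review a concrete trigger.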

4. How Enterprises Should Build & Implement Their AI Governance Framework

A phased, top-down yet iterative approach works best:

- Strategic Planning & Governance Charter: Begin by defining the organizational AI strategy, risk appetite, ethical principles, compliance posture, and governance ownership.

- Inventory & Discovery: Map all existing AI systems, data pipelines, datasets, and decision workflows. Maintain a registry of models, data sources, and use cases.

- Risk Classification & Prioritization: Assess each AI system’s regulatory, ethical, operational, and societal risk. Classify and prioritize based on impact, exposure, and business criticality.

- Policy Definition & Control Implementation: Define internal policies (ethical use, data handling, transparency, human oversight), align them with external standards and regulations (ISO/IEC 42001, EU AI Act), and build technical and legal controls around them.

- Implementation (Data + Model + Deployment): Build or onboard models with compliance infrastructure in place: version control, documentation, bias/fairness testing, logging, audit trails, human review, and security controls.

- Continuous Monitoring & Operational Assurance: Deploy monitoring for drift, fairness, performance, and compliance. Maintain logs and incident-handling procedures, update policies, and revalidate models periodically.

- Audit & Compliance Review: Run periodic internal and external audits, keep documentation ready for regulators, and be able to produce model lineage, risk assessments, fairness reports, and compliance evidence on request.

- Governance Maturation & Culture Building: Embed governance into everyday practice: train teams, define governance roles, integrate controls into DevOps processes, and track evolving regulations and ethical standards.
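The inventory and implementation steps above can be tied together with a simple registry that blocks deployment until the evidence required for a model's risk tier exists. This Python sketch is illustrative only: the `ModelEntry` fields, the tier names, and the evidence labels in `REQUIRED_EVIDENCE` are hypothetical and should be replaced by whatever your own policies define.

```python
from dataclasses import dataclass, field


@dataclass
class ModelEntry:
    """Hypothetical registry record for one AI system."""
    name: str
    owner: str
    use_case: str
    risk_tier: str                                   # "low", "medium", or "high"
    data_sources: list[str] = field(default_factory=list)
    evidence: set[str] = field(default_factory=set)  # artifacts already on file


# Evidence required before deployment, per tier (illustrative policy).
REQUIRED_EVIDENCE = {
    "low": {"documentation"},
    "medium": {"documentation", "bias_test"},
    "high": {"documentation", "bias_test", "human_review", "monitoring_plan"},
}


def missing_evidence(entry: ModelEntry) -> list[str]:
    """Evidence items still outstanding for this model's risk tier."""
    return sorted(REQUIRED_EVIDENCE[entry.risk_tier] - entry.evidence)


def ready_to_deploy(entry: ModelEntry) -> bool:
    """A model passes the gate only when nothing is missing."""
    return not missing_evidence(entry)


registry = [
    ModelEntry("support-chatbot", "cx-team", "customer FAQ", "low",
               ["help-center articles"], {"documentation"}),
    ModelEntry("loan-scoring", "credit-risk", "loan approval", "high",
               ["application data"], {"documentation", "bias_test"}),
]

for entry in registry:
    print(entry.name, ready_to_deploy(entry), missing_evidence(entry))
```

A gate like this can run in a CI pipeline, so that sign-off depends on recorded evidence rather than on memory or email threads.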

5. Benefits of Implementing a Robust AI Governance Framework

A well-structured AI Governance Framework is not merely a compliance obligation; it is an enterprise capability that delivers durable competitive advantage. Organizations that operationalize governance experience measurable and strategic benefits across technology, brand equity, financial performance, and regulatory resilience.

5.1 Strengthened Trust and Digital Reputation

In an environment where AI decisions influence formal approvals, financial recommendations, hiring outcomes, and health-related decisions, enterprises must demonstrate fairness, clarity and traceability. Governance enhances organizational trustworthiness by enabling auditable records, explainable model outcomes, transparency of decision paths, and structured evidence of risk treatment. This strengthens confidence among regulators, customers, institutional investors and corporate partners.

5.2 Faster and More Confident Scaling of AI Initiatives

Without governance, scaling AI becomes risky — errors propagate faster, unseen model drift accumulates, and compliance gaps remain hidden. Governance frameworks accelerate adoption by making AI deployable at scale with formal readiness checks, validated documentation, and production monitoring pipelines. With governance embedded at design time, teams avoid last-minute rework, prevent compliance conflicts, and obtain faster sign-offs from risk and legal stakeholders.

5.3 Lower Operational Risk Through Continuous Observability

Governance transforms AI from “set-and-forget models” to systems continuously evaluated across stability, accuracy, fairness, data validity and security integrity. Drift detection, incident escalation mechanisms, resilience testing, and periodic audits mitigate failures early—reducing the cost of remediation and protecting users from unintended impacts. This structural reduction in uncertainty leads to measurable operational continuity and system reliability.

5.4 Improved Regulatory Preparedness and Evidence-Based Compliance

Regulations such as the EU AI Act, together with standards and frameworks such as ISO/IEC 42001 and the NIST AI RMF, call for traceability, documentation, risk categorization, incident reporting, and human-oversight structures. A governance framework helps enterprises meet these expectations proactively, rather than reactively after launch. Organizations maintain:

- Evidence logs

- Compliance inventories

- Explainability reports

- Data provenance records

- Scheduled review trails

This makes regulatory audits significantly faster, less costly, and more defensible.

5.5 Cross-Functional Alignment and Accountability

Governance introduces structural clarity: who owns decisions, who validates risk, and who approves deployment changes. When roles, escalation paths, and review points are formalized, ambiguity is eliminated. Engineering, legal, compliance, data science and product teams collaborate under a unified lifecycle framework, reducing cross-departmental friction.

5.6 Product and Business Differentiation

Modern buyers, especially in enterprise procurement, increasingly evaluate AI vendors on governance maturity. Verified governance controls—such as auditability, fairness testing, reproducibility, real-time monitoring and human-override mechanisms—differentiate providers that deliver responsible innovation over those that simply deliver models. In markets where AI claims are commoditized, governance becomes the source of strategic defensibility.

5.7 Greater Protection of Customer Rights and Societal Impact

Governance makes AI not only safe in operational terms, but fair and equitable in societal outcomes. Through bias controls, access rules, transparent logic pathways and notification mechanisms, enterprises safeguard users from opaque decision-making, discrimination, or unconsented inference. As public expectations evolve, this protection is foundational to ethical technology stewardship.

6. The Bigger Context: Standards, Regulations & Industry Alignment

In 2025, a growing number of global frameworks and regulations give strategic significance to AI governance:

- The EU AI Act: Provides a risk-based regulatory architecture, classifying AI systems by risk and imposing requirements on high-risk systems. It is fast becoming a de facto global reference point for compliance.

- ISO/IEC 42001: A standard for AI management systems, published in 2023, offering internationally recognized criteria for governance, risk management, and compliance across the AI lifecycle.

- NIST AI RMF (Risk Management Framework): A flexible, voluntary framework designed to help organizations structure governance, risk assessment, monitoring, and continuous assurance.

7. Conclusion – Governance as Strategic Infrastructure

AI is rewriting the fabric of enterprise operations, customer experience, and global systems. But with great capability comes equally great responsibility. An AI Governance Framework is the structural architecture that converts AI from a speculative asset into a trusted, compliant, and scalable capability.

For organizations that treat governance as a strategic infrastructure — not a compliance afterthought — AI becomes an engine of sustainable innovation, not a source of risk. Implementing a comprehensive framework ensures ethical AI, regulatory readiness, operational stability, and stakeholder trust.

At Adeptiv AI, we believe that governance is not a burden — it is the foundation on which ethical, scalable, and future-proof AI enterprises are built.

8. Closing Perspective: Turning Governance into Scalable Enterprise Infrastructure

As artificial intelligence becomes deeply intertwined with operational decision-making, regulatory accountability, and enterprise-wide digital transformation, organizations can no longer rely on informal oversight structures or fragmented risk controls. AI Governance Frameworks are now the strategic foundation that allows enterprises to innovate responsibly, scale confidently, and maintain institutional credibility in a regulatory environment that is evolving faster than ever.

A well-implemented governance framework ensures that every AI system is traceable, fair, compliant, and continuously observable across its full lifecycle. It enables leadership teams to demonstrate ethical accountability, adhere to global standards, satisfy regulatory audits, and deploy AI assets at scale without compromising safety or legality. More importantly, it transforms AI from a set of experimental capabilities into enterprise-grade systems with documented lineage, measurable risk profiles, human-oversight checkpoints, and verifiable assurance.

For organizations that view governance not as a constraint but as structural infrastructure, AI becomes sustainable, defensible, and trustworthy. It strengthens stakeholder confidence, institutional resilience, and long-term innovation potential. The enterprises that build AI governance today are the ones best positioned to lead tomorrow’s intelligent, regulated, and outcome-driven digital economy.

At Adeptiv AI, our mission is to operationalize this future—where governance is not reactive, but anticipatory; not manual, but automated; and not peripheral, but central to how AI is designed, deployed, and managed at enterprise scale.

FAQs

Q1: What is the difference between AI Governance and AI Compliance?

AI Governance is a broader concept covering policies, ethics, data integrity, risk management, and lifecycle oversight. AI Compliance is a subset focused on meeting regulatory and legal obligations. Governance ensures compliance — but also ensures ethical, transparent, and responsible AI operations.

Q2: Does adherence to ISO/IEC 42001 guarantee regulatory compliance?

Not automatically. ISO/IEC 42001 provides best-practice requirements for AI management systems, but compliance with applicable regulations (e.g. the EU AI Act) may require additional measures. ISO/IEC 42001 helps align internal practices with international standards and simplifies regulatory readiness.

Q3: How often should organizations reassess their AI governance framework?

Governance should be dynamic. A good practice is to reassess at least annually — and also whenever new AI systems are added, regulations change, or risk profiles evolve. Continuous monitoring and lifecycle management are essential.

Q4: Who should lead governance efforts inside an organization?

Governance should be multi-disciplinary — involving legal, compliance, data science, security, engineering, and executive leadership. Often a dedicated governance office or Chief AI Governance Officer (or equivalent) ensures accountability and oversight.

Q5: Is AI governance only relevant for large enterprises?

No. While larger enterprises may face higher regulatory and operational risk, even small and medium organizations benefit from governance — especially when using third-party AI services or handling personal data. Good governance builds trust, reduces liability, and enables scalable, compliant AI adoption.