Hiring the perfect candidate has always been tricky, and the fast-moving business world has made it even harder. Companies now use artificial intelligence tools to make faster, better-informed hiring choices, with a growing focus on ethical AI to ensure fairness and transparency. Many jump in without examining how these complex systems actually work.

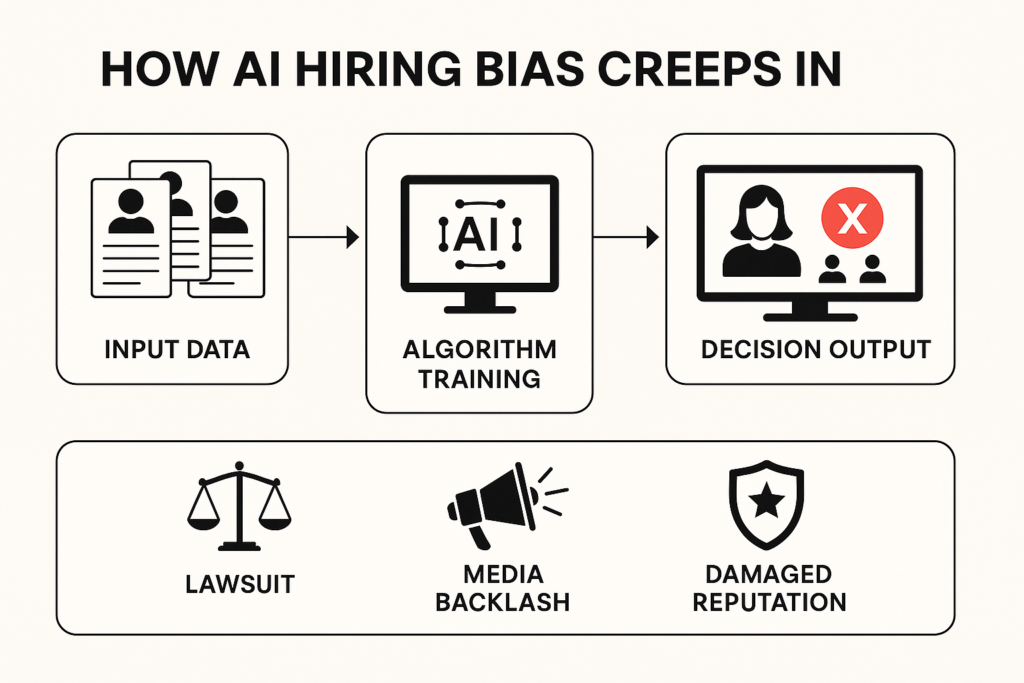

The big issue is that many recruitment algorithms are not as fair or unbiased as people assume. When these tools get it wrong, the fallout goes beyond public embarrassment. Companies can face lawsuits that cost both time and money, regulators scrutinising how hiring is done, and broken trust within the workplace that can take years to repair.

At a Glance

- Discover why recruitment algorithms can embed bias and legal risk despite appearing neutral.

- Learn how global laws (US, EU, India) are holding AI-powered hiring tools accountable.

- Examine real-world cases where biased hiring AI caused litigation or reputational damage.

- Get actionable steps to embed Responsible AI and solid AI Governance Frameworks into your recruitment process.

- Find out why integrating policy, transparency, accountability, and monitoring is essential for AI Compliance.

Let’s dive into this important subject further.

Why recruitment algorithms sometimes show bias and lead to unfair hiring practices

AI tools in hiring often seem efficient and modern, but they carry hidden risks. These systems learn from historical data — and if that data reflects past human biases (for example, fewer women in leadership roles), the algorithm can replicate them.

Additionally, design oversights (skipping fairness checks), improper deployment contexts, or lack of human oversight can allow bias to creep in.

For example, an open-source study posted on arXiv found that resume-screening AI systems favored men in higher-wage roles, replicating occupational gender stereotypes.

Without a strong AI governance & ethical AI layer, hiring systems may unintentionally discriminate — and that’s where legal and reputational risks emerge.

How laws address the use of AI in hiring (yes, AI must follow rules too!)

In many jurisdictions, using AI in hiring is no longer a grey area — it’s regulated.

- United States: Automated hiring systems remain subject to anti-discrimination law such as Title VII, and automated job postings can breach wage-transparency statutes when they fail to disclose salary ranges.

- European Union: The EU AI Act, in force since August 2024 with obligations phasing in over the following years, classifies hiring-related AI as “high-risk.” Compliance requires explaining decisions, documenting training data, and providing human review.

- US state laws: Colorado’s SB 24-205 (originally effective February 2026, since delayed to June 30, 2026) treats AI hiring systems as “high-risk” and mandates disclosures about their use and impact.

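Failing to comply can lead to fines and lawsuits. Under the EU AI Act, penalties reach up to 7% of global annual turnover for prohibited practices and up to 3% for breaches of high-risk system obligations, the tier most relevant to hiring tools. When your AI-driven hiring process lacks transparency or accountability, the risk becomes real.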

True stories where hiring algorithms caused issues and led to real challenges

1. Amazon’s Hidden AI Bias Controversy

Amazon reportedly scrapped an AI-powered recruiting tool that favoured male candidates, specifically penalizing resumes with terms like “women’s chess club.” While no lawsuit resulted, the reputational fallout was heavy.

2. HireVue vs Illinois

Illinois regulators scrutinised HireVue’s video-interview AI under the state’s AI Video Interview Act. Despite HireVue’s claims of fairness, the investigation raised red flags for HR-tech vendors and buyers.

3. AI in Hiring Legal Cases

A legal review highlights that AI systems which screen candidates based on historic data or undisclosed criteria can violate non-discrimination laws, and that employers generally remain liable even when a vendor supplies the tool.

These examples underline the message: technology may be innovative, but governance and ethical AI oversight matter even more.

Ways ethical AI can improve hiring processes to be fair, effective, and compliant

1. Fairness: Avoid Using Flawed Data

Ensure training data is representative, current, and free of legacy bias. Use regular bias audits and diverse dataset reviews.
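A bias audit usually starts with a simple comparison of selection rates across groups. The sketch below computes each group's impact ratio (its selection rate divided by the highest group's rate) and flags any group below 0.8. The 0.8 cut-off follows the commonly cited "four-fifths" rule of thumb and is an assumption here, not a legal test; the group labels and counts are hypothetical illustration data.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: selected / total} from (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide every group's selection rate by the highest selection rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected; ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the chosen threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening outcomes: 40 of 100 selected vs. 24 of 100 selected.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 24 + [("group_b", False)] * 76
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

A flagged group is a prompt for closer investigation (sample size, job-relatedness of the criteria, data quality), not proof of discrimination on its own.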

2. Transparency: Break Open the Black Box

Inform candidates when AI is involved, disclose the key criteria it uses, and provide meaningful human review options. GDPR and New York City’s Local Law 144 both emphasise this.

3. Accountability: Humans in the Loop

Always assign human oversight for critical hiring decisions. AI should assist, not replace, human judgment.
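One way to keep AI in an assisting role is to make sure no code path lets the model reject a candidate by itself. The following is a minimal sketch under that assumption; `ScreeningResult`, `route`, the score range, and the 0.75 threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScreeningResult:
    candidate_id: str
    ai_score: float  # hypothetical model score, assumed to lie in [0, 1]


def route(result, advance_threshold=0.75):
    """Decide the next step; the model can prioritise but never reject."""
    if not 0.0 <= result.ai_score <= 1.0:
        raise ValueError("ai_score must be between 0 and 1")
    if result.ai_score >= advance_threshold:
        # High scores are fast-tracked, but a person still confirms.
        return "advance_pending_human_confirmation"
    # Low scores are never auto-rejected; a recruiter makes the call.
    return "human_review"


print(route(ScreeningResult("c-001", 0.91)))
print(route(ScreeningResult("c-002", 0.18)))
```

Because the function has no "reject" outcome, every adverse decision has to be made, and owned, by a named human reviewer.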

4. Privacy & Security: Protect Candidate Data

Limit collection of sensitive attributes, anonymise data, obtain consent, and ensure vendor compliance.
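As a rough illustration of data minimisation, the sketch below drops sensitive fields before a record reaches a screening model and replaces the candidate ID with a keyed hash. The field names, `minimise`, and `pseudonymise_id` are hypothetical. Note that this is pseudonymisation rather than full anonymisation: remaining fields can still act as proxies for protected attributes, so it complements bias testing rather than replacing it.

```python
import hashlib
import hmac

# Hypothetical list of fields to withhold from the screening model.
SENSITIVE_FIELDS = {"name", "gender", "date_of_birth", "photo_url", "ethnicity"}


def pseudonymise_id(candidate_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest so IDs cannot be reversed without the key."""
    return hmac.new(secret_key, candidate_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimise(record: dict, secret_key: bytes) -> dict:
    """Strip sensitive fields and swap the raw ID for a pseudonymous reference."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in SENSITIVE_FIELDS and key != "candidate_id"
    }
    cleaned["candidate_ref"] = pseudonymise_id(record["candidate_id"], secret_key)
    return cleaned


# Illustration only: in practice the key would come from a secrets manager.
key = b"replace-with-a-managed-secret"
raw = {
    "candidate_id": "c-042",
    "name": "A. Example",
    "gender": "F",
    "skills": ["python", "sql"],
    "years_experience": 6,
}
print(minimise(raw, key))
```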

5. Reliability & Compliance Monitoring

Deploy continuous monitoring, logging, and audit trails. Map these to a strong AI governance framework, aligning with standards like ISO 42001 or NIST AI RMF.
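An audit trail is more useful when later edits to it can be detected. The sketch below chains each log entry to the previous one with a SHA-256 hash, so changing any past entry breaks verification. The hash chain is an illustrative design choice, not a requirement taken from this article, and the event fields shown are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash used before the first entry


def _digest(event, prev_hash):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event whose hash depends on everything logged before it."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS_HASH
    log.append(
        {"event": event, "prev_hash": prev_hash, "entry_hash": _digest(event, prev_hash)}
    )


def verify(log):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if _digest(entry["event"], prev_hash) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


audit_log = []
append_entry(audit_log, {"candidate_ref": "ab12", "model_version": "v3", "decision": "human_review"})
append_entry(audit_log, {"candidate_ref": "ab12", "reviewer": "recruiter_7", "decision": "advance"})
print(verify(audit_log))
```

In a real deployment the log would also need durable, access-controlled storage; the chain only makes tampering detectable, it does not prevent it.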

By embedding ethical practices into hiring algorithms — and supporting them with structural governance — organizations can reduce risk, trust their process, and attract inclusive talent.

Why integrating ethical AI and governance matters for your business

Ignoring ethical AI or governance isn’t just risky — it’s costly. Biased AI hiring systems can lead to lawsuits, regulatory fines, and lost brand value.

On the flip side, companies that adopt Responsible AI and combined AI governance frameworks enjoy higher trust, smoother audits, and stronger employer brands.

As talent markets remain competitive (AI leadership roles are up 40-60% in FY25, according to The Economic Times), your hiring process becomes a key differentiator, provided it is fair, transparent, and compliant.

Conclusion

Using AI for hiring isn’t inherently harmful — but if you proceed without Responsible AI and a formal AI governance & ethical AI infrastructure, you open yourself up to regulatory, legal, and reputational damage. By pairing ethical practices with robust governance, you turn hiring AI into a competitive advantage instead of a liability.

Protect your process, protect your brand, and protect your people — build fairness, transparency, and accountability into your AI hiring today.

FAQs

Q1: What’s the difference between Responsible AI and AI Governance?

Responsible AI focuses on ethical AI principles (fairness, transparency, accountability). AI Governance refers to the structures, processes, and controls that implement those principles across an organization.

Q2: Do I need to document every hiring algorithm?

Yes. Legal guidance (Greenberg Traurig) suggests organizations maintain records of training data, decision logic, bias testing, human oversight, and candidate outcomes.

Q3: If I use a third-party hiring tool, who is liable?

Primarily, the employer (HCAMag). Even when using vendors, you must disclose usage, ensure fairness audits are carried out, and hold vendors accountable through your contracts.

Q4: Can AI be used in hiring safely?

Absolutely — when supported by ethical design, human oversight, transparency, proper governance, and continuous monitoring.

Q5: What immediate steps should I take to improve hiring AI compliance?

Start with an algorithm inventory, conduct a bias audit, engage legal review, ensure disclosures to candidates, build human review paths, and map your controls to an AI governance framework.