At a Glance

- Why ethical AI governance is the cornerstone of business trust and resilience.

- The new era of AI compliance: EU AI Act, NIST AI RMF, and ISO 42001.

- Real-world frameworks from Microsoft, SAP, and UNESCO shaping global standards.

- The 7-step blueprint to build a resilient AI governance framework.

- How Responsible AI drives innovation, mitigates risk, and builds stakeholder trust.

The Executive Imperative: Governing AI Before It Governs You

AI is now the defining force of competitive advantage — and corporate accountability.

Yet as enterprises rush to scale AI systems, many underestimate a critical truth: without ethical AI governance, innovation becomes a liability.

From biased algorithms that damage reputation to opaque models that violate privacy laws, the risks are no longer hypothetical. In 2025, the cost of non-compliance is rising, driven by binding regulation such as the EU AI Act, by state-level legislative efforts in the US such as California’s SB 1047, and by guidance from bodies such as the US AI Safety Institute.

In this new environment, Ethical AI governance is not just a compliance function — it’s a strategic differentiator. It defines how responsibly, transparently, and sustainably your organization innovates with AI.

Why Ethical AI Governance Matters More Than Ever

AI governance ensures that AI systems are transparent, accountable, safe, and fair throughout their lifecycle. It aligns data practices, model development, and deployment decisions with ethical and legal standards.

But it’s also a trust engine — the mechanism by which boards, investors, customers, and regulators can believe in your AI.

- Trust builds brand equity and loyalty.

- Transparency reduces regulatory and reputational risk.

- Accountability creates a culture of responsibility across teams.

According to a 2024 Deloitte survey, 78% of executives believe that ethical AI governance practices directly influence brand trust, and 64% say that AI governance has become a board-level priority.

Global Momentum: Ethics and AI Regulation Converge

Governments and global institutions are accelerating regulation to ensure AI is safe, transparent, and aligned with human values.

- EU AI Act: The world’s first comprehensive AI regulation, classifying AI systems by risk category. Penalties for the most serious violations can reach €35 million or 7% of worldwide annual turnover, whichever is higher.

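- California SB 1047 (Safe and Secure Innovation for Frontier Artificial Intelligence Models Act): Passed by the state legislature in 2024 but vetoed by the governor. It would have required developers of the largest AI models to adopt safety protocols and guard against foreseeable critical harms, and it signals where state-level rulemaking may be heading.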

- NIST AI Risk Management Framework (AI RMF): A voluntary framework organized around four core functions (Govern, Map, Measure, Manage) that establishes governance principles around transparency, reliability, and accountability.

- ISO/IEC 42001:2023: A global AI management system standard that operationalizes Responsible AI governance.

- UNESCO’s Recommendation on the Ethics of Artificial Intelligence (2021): The first global framework emphasizing human rights, fairness, and environmental sustainability in AI design.

The message is clear: AI governance and ethics are no longer optional — they are the license to operate in the AI economy.
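To make the EU AI Act penalty ceiling concrete, here is a minimal sketch of the "whichever is higher" arithmetic for the top penalty tier. The function name and the example turnover figures are illustrative assumptions; lower penalty tiers and special rules for smaller companies are not modelled.

```python
def max_fine_eur(worldwide_annual_turnover_eur: int) -> float:
    """Upper bound of the top EU AI Act penalty tier, as described above.

    Assumption: the cap is the higher of a fixed EUR 35 million and
    7% of worldwide annual turnover. Other tiers are not modelled.
    """
    fixed_cap_eur = 35_000_000
    turnover_based_cap = worldwide_annual_turnover_eur * 7 / 100
    return max(fixed_cap_eur, turnover_based_cap)


# Hypothetical companies: for the smaller one the fixed cap dominates,
# for the larger one the turnover-based cap dominates.
small_company_cap = max_fine_eur(200_000_000)
large_company_cap = max_fine_eur(2_000_000_000)
```

For a company with €200 million in turnover the fixed €35 million figure is the binding ceiling; at €2 billion in turnover the 7% figure (€140 million) takes over.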

Learning from Leaders: How Industry Giants Embed AI Ethics

The world’s most innovative companies understand that AI ethics equals business resilience.

- Microsoft’s Responsible AI Framework

Microsoft operationalizes governance through its “Responsible AI Standard,” embedding fairness, inclusivity, and explainability into every product cycle. Its Office of Responsible AI sets clear accountability chains, ensuring all models meet compliance and ethical review before deployment.

- SAP’s AI Ethics Principles

SAP’s governance model focuses on “human-centered AI,” guided by principles like transparency, privacy, and sustainability. It established an AI Ethics Steering Committee to assess risk and align technology development with social responsibility objectives.

- UNESCO’s Ethics Recommendation

UNESCO’s global guideline — adopted by 193 countries — emphasizes that AI must serve humanity’s collective welfare, prioritizing environmental, cultural, and societal well-being. It’s an ethics-first lens that enterprises are increasingly aligning with.

These examples highlight one truth: governance frameworks don’t slow innovation — they enable responsible innovation.

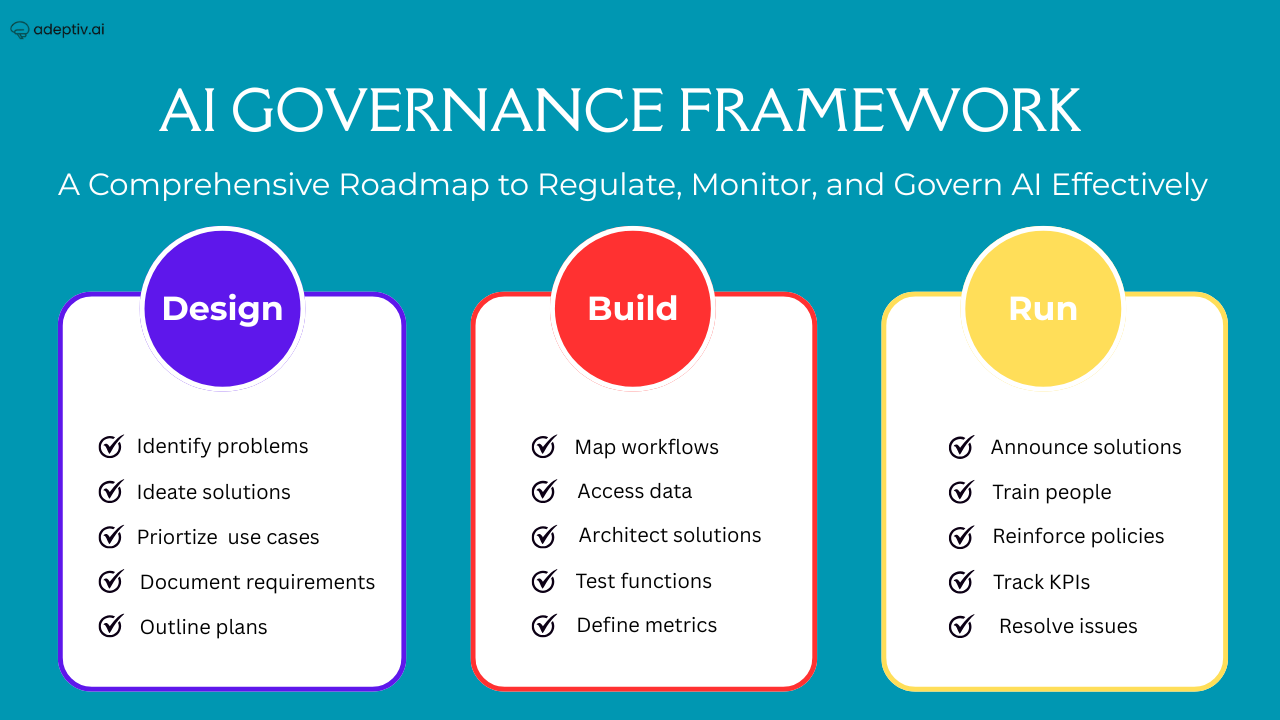

Building a Resilient AI Governance Framework

To defend against ethical, legal, and operational risks, organizations need structured governance.

Below is a seven-step blueprint to integrate Ethical AI governance throughout your AI lifecycle.

1. AI Inventory & Risk Classification

Catalog every AI system in use — including shadow AI developed outside formal governance.

Classify systems by impact risk (low, medium, high) using dimensions like data sensitivity, automation level, and potential societal harm.

This creates your foundational AI map — essential for oversight and compliance tracking.
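One way to picture the inventory is as a list of records, each scored on the three dimensions named above. The sketch below is an assumption-laden illustration: the `AISystem` fields, the example systems, and the "highest dimension wins" rule are all hypothetical choices, not a prescribed method.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AISystem:
    name: str
    owner: str
    data_sensitivity: Level
    automation_level: Level
    societal_harm: Level
    formally_approved: bool = True  # False marks "shadow AI"


def risk_tier(system: AISystem) -> Level:
    """Hypothetical conservative rule: the tier is the highest score on any dimension."""
    return max(system.data_sensitivity, system.automation_level, system.societal_harm)


# Illustrative inventory; the systems and scores are made up.
inventory = [
    AISystem("resume-screener", "HR", Level.HIGH, Level.MEDIUM, Level.HIGH),
    AISystem("doc-search", "IT", Level.LOW, Level.LOW, Level.LOW),
    AISystem("sales-chatbot", "Marketing", Level.MEDIUM, Level.HIGH, Level.LOW,
             formally_approved=False),
]

high_risk = [s.name for s in inventory if risk_tier(s) == Level.HIGH]
shadow_ai = [s.name for s in inventory if not s.formally_approved]
```

Taking the maximum across dimensions is deliberately cautious: a system is never rated lower than its worst dimension. A weighted score is an equally reasonable alternative if your risk policy calls for it.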

2. Ethical Principles & Policy Layer

Define your Responsible AI principles — fairness, transparency, accountability, security, and human oversight.

Translate them into enforceable internal policies.

This policy layer acts as organizational guardrails, ensuring ethical consistency across all teams and vendors.

3. Technical Controls & Safeguards

Embed ethics in code through explainability tools, bias detection tests, differential privacy, and human-in-the-loop checkpoints.

Establish clear model documentation (e.g., model cards, data lineage) for auditability.

This makes governance measurable and testable, not just theoretical.
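As an illustration of what a bias detection test can look like, the sketch below computes the demographic parity gap, which is the difference in positive-prediction rates between groups. It is only one of several fairness metrics, and the threshold `MAX_GAP`, the group labels, and the sample predictions are assumptions made for the example.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


MAX_GAP = 0.2  # hypothetical policy threshold, set by your governance body

# Made-up binary predictions for two groups of four people each.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_gap(preds, groups)
passes = gap <= MAX_GAP
```

Here group "a" is selected 75% of the time and group "b" 25% of the time, so the gap of 0.5 fails the assumed threshold. A check like this can run in a test suite so that a model cannot ship while the gap exceeds the agreed limit.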

4. Monitoring, Logging & Audit Trails

Operational transparency depends on robust monitoring.

Implement continuous performance tracking, model drift alerts, version control, and automated audit trails.

When something goes wrong, every decision and input must be traceable and reproducible.
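One possible shape for an automated audit trail is a hash-chained log, where each entry's hash covers the previous entry so that later edits become detectable. The sketch below uses only the Python standard library; the event fields (`model`, `version`, `input_id`, `decision`) are hypothetical, and a production system would also need durable storage and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event, prev_hash):
    """SHA-256 over the event and the previous entry's hash."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event, chaining it to the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log):
    """Recompute every hash; return False if any entry was altered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Illustrative decisions from a hypothetical credit model.
log = []
append_entry(log, {"model": "credit-scorer", "version": "1.4.0",
                   "input_id": "req-001", "decision": "approve"})
append_entry(log, {"model": "credit-scorer", "version": "1.4.0",
                   "input_id": "req-002", "decision": "deny"})
chain_is_intact = verify(log)
```

Recording the model version and an input identifier with each decision is what makes an outcome reproducible later; the hash chain only adds evidence that the record itself has not been changed.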

5. Governance Bodies & Accountability

Create a cross-functional AI ethics committee or AI steering board.

Include experts from legal, data science, risk, HR, and external advisors.

Define who is accountable for AI outcomes — and how accountability is enforced.

As seen at Microsoft and SAP, this cross-discipline model drives both compliance and cultural alignment.

6. External Audits & Compliance Mapping

Invite independent audits for objectivity and credibility.

Map your controls to standards like:

- NIST AI RMF

- ISO 42001

- EU AI Act risk categories

- OECD AI Principles

These audits strengthen trust with clients, investors, and regulators — while revealing governance gaps before they become crises.
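Compliance mapping can start as a simple table of internal controls against the standards listed above. In the sketch below, the control names and which framework each one supports are purely illustrative assumptions, not an official crosswalk; the point is only to show how coverage gaps can be surfaced automatically.

```python
FRAMEWORKS = ["NIST AI RMF", "ISO 42001", "EU AI Act", "OECD AI Principles"]

# Hypothetical internal controls and the frameworks each is claimed to support.
controls = {
    "model-inventory": {"NIST AI RMF", "ISO 42001", "EU AI Act"},
    "bias-testing": {"NIST AI RMF", "OECD AI Principles"},
    "audit-logging": {"NIST AI RMF", "EU AI Act"},
    "incident-runbook": {"ISO 42001"},
}


def coverage(controls, frameworks):
    """For each framework, list the controls mapped to it (sorted by name)."""
    return {
        fw: sorted(name for name, mapped in controls.items() if fw in mapped)
        for fw in frameworks
    }


def gaps(controls, frameworks, minimum=2):
    """Frameworks supported by fewer than `minimum` controls."""
    cov = coverage(controls, frameworks)
    return [fw for fw in frameworks if len(cov[fw]) < minimum]


coverage_report = coverage(controls, FRAMEWORKS)
under_covered = gaps(controls, FRAMEWORKS)
```

With these made-up mappings, only the OECD AI Principles fall below the assumed minimum of two supporting controls. A real mapping would reference specific clauses or requirements, which an auditor can then check one by one.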

7. Incident Response & Remediation Plans

AI systems will make mistakes — but how you respond defines your credibility.

Develop incident protocols for rollback, human override, stakeholder notification, and legal review.

Treat every incident as a learning opportunity — refining both your models and your governance process.
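An incident protocol can be encoded as a runbook that maps severity to the required actions, so that no step is skipped under pressure. The severity levels, step names, and example incident below are hypothetical; your own protocol may order or group them differently.

```python
from enum import Enum


class Severity(Enum):
    LOW = "low"
    HIGH = "high"
    CRITICAL = "critical"


# Hypothetical runbook: required actions per severity, in order.
RUNBOOK = {
    Severity.LOW: ["human_review"],
    Severity.HIGH: ["human_override", "rollback", "stakeholder_notification"],
    Severity.CRITICAL: ["human_override", "rollback",
                        "stakeholder_notification", "legal_review"],
}


def open_incident(system, severity, description):
    """Create an incident record with its checklist of pending steps."""
    return {
        "system": system,
        "severity": severity,
        "description": description,
        "pending": list(RUNBOOK[severity]),
        "done": [],
    }


def complete_step(incident, step):
    """Mark a required step as done; reject steps that are not pending."""
    if step not in incident["pending"]:
        raise ValueError(f"{step!r} is not a pending step for this incident")
    incident["pending"].remove(step)
    incident["done"].append(step)


def is_resolved(incident):
    return not incident["pending"]


# Illustrative incident for a made-up system.
incident = open_incident("credit-scorer", Severity.HIGH, "approval rate dropped sharply")
complete_step(incident, "human_override")
complete_step(incident, "rollback")
resolved_early = is_resolved(incident)  # stakeholder notification still pending
```

Keeping the completed steps on the record also feeds the learning loop: a post-incident review can see exactly what was done and in what order.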

The Payoff: Responsible AI as a Competitive Edge

When implemented strategically, Ethical AI governance is not a constraint — it’s a catalyst.

Organizations that embed Responsible AI realize tangible business benefits:

- Faster compliance readiness under global laws like the EU AI Act.

- Reduced reputational risk through ethical safeguards and transparency.

- Higher customer trust — critical for sectors like finance, healthcare, and retail.

- Sustainable innovation, backed by data integrity and accountability.

A 2025 PwC report found that companies with mature AI governance frameworks experience 40% fewer AI-related incidents and 25% higher stakeholder trust ratings.

The Human Element: Ethics at the Core

Technology alone cannot ensure ethical AI governance — people and culture must lead.

Organizations should empower employees with AI ethics training, integrate ethical KPIs into leadership metrics, and celebrate responsible innovation.

Ethical decision-making must move from compliance checklists to organizational DNA.

As UNESCO’s global AI ethics report notes:

“AI should augment human capacity, not replace human judgment.”

Conclusion: Governance Is the Foundation of Trusted AI

In an era where every algorithm shapes human experience, AI governance and ethics define long-term resilience.

Regulators will keep tightening, but those who lead with transparency, fairness, and accountability will define the next decade of AI leadership.

Responsible AI isn’t just about avoiding fines — it’s about earning trust, enabling innovation, and building a sustainable digital future.

Adeptiv AI partners with organizations to design and operationalize end-to-end AI governance frameworks, aligning innovation with compliance and ethical excellence.

FAQs

1. What is AI governance?

AI governance is the framework of principles, policies, and processes that ensure AI systems are developed, deployed, and monitored responsibly, ethically, and in compliance with laws.

2. Why is Responsible AI important?

Responsible AI ensures fairness, accountability, transparency, and safety in AI systems — reducing bias, protecting privacy, and improving public trust.

3. How do frameworks like NIST AI RMF or ISO 42001 help?

They provide structured methods to assess, mitigate, and document AI risks, helping organizations demonstrate compliance and ethical integrity.

4. What are the key components of an AI governance framework?

The key components are an AI inventory with risk classification, ethical principles and policies, technical controls, monitoring and audit trails, governance bodies, external audits, and incident response plans.

5. How does AI governance drive competitive advantage?

It minimizes legal exposure, builds consumer trust, and ensures sustainable innovation — making ethical AI governance a strategic differentiator in the marketplace.