AI isn’t just a shiny new add-on for businesses anymore; it’s at the heart of how a lot of industries operate. But with that kind of power comes a mountain of responsibility. Regulators aren’t just sitting on the sidelines; they’re stepping in with real force. We’re talking about the EU’s AI Act, the OECD AI Principles, and, most recently, the Texas Responsible AI Governance Act, which means companies can’t afford to be sloppy about how they build, manage, and track their AI models anymore.

Looking ahead, organizations need to get serious about how they handle the AI model lifecycle. That means figuring out how to stay compliant, making sure everything’s transparent, and, maybe most important, actually getting reliable results from their AI. Otherwise, well, the fines and headaches are coming.

What Is AI Model Lifecycle Management?

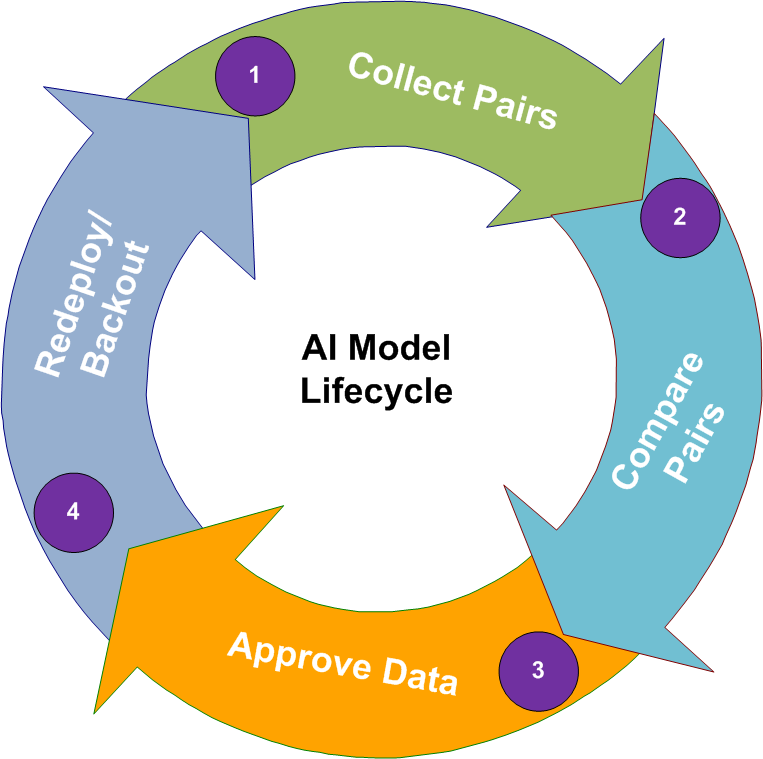

AI Model Lifecycle Management is really about steering your AI project from that first brainstorming session all the way to retirement—no shortcuts. It kicks off with strategy and design, then moves to gathering the right data (because let’s be honest, bad data means bad results). After that, there’s the development and training phase, followed by a thorough round of validation and testing to make sure the model actually delivers.

Once deployed, constant monitoring is non-negotiable; technology doesn’t just run itself. And when the system’s outlived its usefulness or starts causing more headaches than it solves, it’s time for a graceful exit. Proper lifecycle management isn’t just about technical performance. It’s about making sure your AI is ethical, legally sound, and trustworthy in real-world operations. In short, it’s a solid framework for tracking decisions, documenting everything, spotting risks early, and making sure real people are always in the loop – keeping AI safe, accountable, and reliable through every stage.

Why Lifecycle Management Matters

The AI lifecycle spans the design, development, training, deployment, monitoring, and retirement of models. Each stage poses potential risks, ranging from biased training data to model drift after deployment. Mismanaged lifecycles have already produced high-profile failures; Amazon’s AI recruitment tool, for example, was reportedly scrapped over bias problems. Regulators and stakeholders increasingly demand end-to-end accountability for how AI models are built, used, and maintained, and that is why AI model lifecycle management matters.

Key Trends Shaping the Future of AI Model Lifecycle Management

1. Lifecycle Documentation as a Compliance Mandate

Laws like the EU AI Act (Chapter III of the final text, which was Title III in the 2021 proposal) mandate end-to-end documentation across the AI model lifecycle, including:

- Intended purpose and use case

- Sources of training and validation data

- Risk assessments and mitigation plans

- Human oversight methods

- Continuous performance monitoring

Forward-looking organizations are investing in AI compliance platforms that automatically create, store, and audit these artifacts in real time.
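To make the list above a little more tangible, here is a minimal sketch of what one documentation record could look like in code. The `ModelDocumentation` class, its field names, and the `missing_sections` helper are hypothetical; they simply mirror the five items above and are not taken from the EU AI Act or from any particular compliance platform.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelDocumentation:
    """Hypothetical record; one field per documentation item listed above."""

    model_name: str
    intended_purpose: str = ""
    data_sources: List[str] = field(default_factory=list)
    risk_assessments: List[str] = field(default_factory=list)
    human_oversight: List[str] = field(default_factory=list)
    monitoring_plan: str = ""

    def missing_sections(self) -> List[str]:
        """Return the names of documentation sections that are still empty."""
        required = [
            "intended_purpose",
            "data_sources",
            "risk_assessments",
            "human_oversight",
            "monitoring_plan",
        ]
        return [name for name in required if not getattr(self, name)]

    def to_json(self) -> str:
        """Serialize the record so it can be stored or handed to an auditor."""
        return json.dumps(asdict(self), indent=2)


doc = ModelDocumentation(
    model_name="credit-scoring-v2",  # made-up example model
    intended_purpose="Rank loan applications for manual review",
)
print(doc.missing_sections())
# ['data_sources', 'risk_assessments', 'human_oversight', 'monitoring_plan']
```

A check like `missing_sections()` could run in a release pipeline so that a model with incomplete documentation never reaches production unnoticed.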

2. Shift from Static to Dynamic Risk Management

AI risks evolve with time. A model that performs well today may introduce new risks tomorrow due to data drift, environmental changes, or misuse. Compliance now requires dynamic model monitoring through:

- Performance degradation alerts

- Drift detection frameworks

- Re-validation triggers upon significant updates or shifts

This dynamic approach aligns with continuous compliance models that regulators are expected to mandate in the coming years.
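One common way to approach drift detection is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it is distributed in production. The sketch below is illustrative only: the function names, the ten-bin layout, and the 0.2 re-validation threshold are assumptions (0.2 is a widely quoted rule of thumb, not a regulatory requirement).

```python
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of values in each bin defined by consecutive edges."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        # Walk to the right bin; out-of-range values land in the first or last bin.
        idx = 0
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    total = len(values)
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / total, 1e-6) for c in counts]


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a production sample (both non-empty)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant
    edges = [lo + i * width for i in range(bins + 1)]
    e = _bin_fractions(expected, edges)
    a = _bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_revalidation(
    expected: Sequence[float], actual: Sequence[float], threshold: float = 0.2
) -> bool:
    """Re-validation trigger: True when drift exceeds the assumed threshold."""
    return population_stability_index(expected, actual) > threshold


baseline = [i / 100 for i in range(100)]          # training-time feature values
production = [0.5 + i / 200 for i in range(100)]  # shifted production values
print(needs_revalidation(baseline, production))
```

In a real setup the trigger would feed an alerting channel or open a re-validation ticket rather than print a flag.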

3. Integration of Human Oversight Across the Lifecycle

Effective human oversight, not just nominal oversight, is a necessity. Best practices include:

- Identified escalation processes for AI decisions

- Role-based review functions (e.g., data steward, risk manager)

- Transparent override process and audit trail

Emerging laws also contemplate “meaningful human participation”, especially in high-risk AI systems that impact fundamental rights or safety.
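As a rough sketch of the escalation, role-based review, and override practices above, the example below keeps an append-only audit trail of automated and human actions. Everything in it is hypothetical: the role names, the `Decision` and `AuditTrail` classes, and the 0.7 confidence threshold that triggers escalation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List

REVIEWER_ROLES = {"data_steward", "risk_manager"}  # hypothetical role names


@dataclass(frozen=True)
class Decision:
    case_id: str
    model_output: str
    confidence: float


@dataclass(frozen=True)
class AuditEntry:
    case_id: str
    action: str  # "auto_approved", "escalated" or "overridden"
    actor: str
    detail: str
    timestamp: str


class AuditTrail:
    def __init__(self, escalation_threshold: float = 0.7):
        self.escalation_threshold = escalation_threshold
        self._entries: List[AuditEntry] = []

    def _log(self, case_id: str, action: str, actor: str, detail: str) -> AuditEntry:
        stamp = datetime.now(timezone.utc).isoformat()
        entry = AuditEntry(case_id, action, actor, detail, stamp)
        self._entries.append(entry)  # entries are only ever appended
        return entry

    def route(self, decision: Decision) -> AuditEntry:
        """Escalate low-confidence decisions to a human; log every outcome."""
        if decision.confidence < self.escalation_threshold:
            detail = f"confidence={decision.confidence}"
            return self._log(decision.case_id, "escalated", "system", detail)
        return self._log(decision.case_id, "auto_approved", "system", decision.model_output)

    def override(self, decision: Decision, role: str, new_output: str, reason: str) -> AuditEntry:
        """Record a human override; only known reviewer roles may do this."""
        if role not in REVIEWER_ROLES:
            raise PermissionError(f"role {role!r} may not override decisions")
        if not reason.strip():
            raise ValueError("an override must record a reason")
        detail = f"{decision.model_output} -> {new_output}: {reason}"
        return self._log(decision.case_id, "overridden", role, detail)

    def history(self, case_id: str) -> List[AuditEntry]:
        return [e for e in self._entries if e.case_id == case_id]


trail = AuditTrail()
loan = Decision(case_id="case-001", model_output="reject", confidence=0.55)
trail.route(loan)
trail.override(loan, "risk_manager", "approve", "income verified manually")
for entry in trail.history("case-001"):
    print(entry.action, entry.actor, entry.detail)
```

Requiring a reason for every override is a design choice here: it keeps the trail useful to an auditor who needs to understand why a human disagreed with the model.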

4. Adoption of AI Compliance Platforms

To address growing compliance pressure, companies are turning to AI compliance platforms that include:

- Centralized model registries

- Automated documentation

- Risk classification engines (e.g., based on EU AI Act risk tiers)

- Policy-based access and approvals

These platforms provide a single source of truth for regulators, legal teams, and internal stakeholders.
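To show how a registry, a risk classification step, and a policy-based approval could fit together, here is a small sketch. The four tier names follow the commonly described EU AI Act risk levels, but the use-case lookup table is a simplified, hypothetical stand-in (real classification depends on the legal text and the specific deployment), and the `ModelRegistry` class with its approval rule is an assumption, not any vendor's API.

```python
from enum import Enum
from typing import Dict


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified lookup for illustration only.
USE_CASE_TIERS: Dict[str, RiskTier] = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "recruitment": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


class ModelRegistry:
    """Central registry: one entry per model version, with tier and approval."""

    def __init__(self) -> None:
        self._models: Dict[str, dict] = {}

    def register(self, name: str, version: str, use_case: str) -> RiskTier:
        # Unknown use cases default to HIGH so they get reviewed, not waved through.
        tier = USE_CASE_TIERS.get(use_case, RiskTier.HIGH)
        if tier is RiskTier.UNACCEPTABLE:
            raise ValueError(f"use case {use_case!r} may not be registered")
        key = f"{name}:{version}"
        self._models[key] = {"use_case": use_case, "tier": tier, "approved_by": None}
        return tier

    def approve(self, key: str, approver: str) -> None:
        self._models[key]["approved_by"] = approver

    def can_deploy(self, key: str) -> bool:
        """Assumed policy: high-risk models need a named approver before deployment."""
        entry = self._models[key]
        if entry["tier"] is RiskTier.HIGH:
            return entry["approved_by"] is not None
        return True


registry = ModelRegistry()
registry.register("cv-screener", "1.0", "recruitment")
print(registry.can_deploy("cv-screener:1.0"))  # blocked until someone approves
registry.approve("cv-screener:1.0", "risk_manager")
print(registry.can_deploy("cv-screener:1.0"))
```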

5. Global Harmonization and Interoperability

Global AI standards are likely to become more harmonized over time. Organizations should build AI model lifecycle management that can demonstrate compliance with:

- EU AI Act (risk classification, conformity assessments)

- OECD AI Principles (transparency, robustness, accountability)

- NIST AI RMF (risk governance functions: Map, Measure, Manage, Govern)

- ISO/IEC 42001 (AI management systems)

This calls for a modular compliance architecture, one in which organizations can plug each regulatory framework into a shared foundation.
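One way to picture a modular setup is a catalog in which each framework is a plug-in that lists the internal controls it expects, so a single set of implemented controls can be checked against all of them. The sketch below assumes exactly that. The control identifiers are hypothetical labels loosely based on the parentheticals in the list above; they are not official requirement names from any of the four frameworks.

```python
from typing import Dict, Iterable, List, Optional, Set


class ComplianceCatalog:
    """Maps each framework (a plug-in) to the internal controls it expects."""

    def __init__(self) -> None:
        self._frameworks: Dict[str, Set[str]] = {}

    def register_framework(self, name: str, controls: Iterable[str]) -> None:
        """Plug a framework in (or update it) without touching the others."""
        self._frameworks[name] = set(controls)

    def gaps(
        self, implemented: Set[str], frameworks: Optional[List[str]] = None
    ) -> Dict[str, List[str]]:
        """Controls still missing per framework, given what is implemented."""
        selected = frameworks if frameworks is not None else list(self._frameworks)
        return {
            name: sorted(self._frameworks[name] - implemented) for name in selected
        }


catalog = ComplianceCatalog()
# Hypothetical control identifiers, for illustration only.
catalog.register_framework("EU AI Act", ["risk_classification", "conformity_assessment"])
catalog.register_framework(
    "OECD AI Principles",
    ["transparency_report", "robustness_testing", "accountability_owner"],
)
catalog.register_framework("NIST AI RMF", ["govern", "map", "measure", "manage"])
catalog.register_framework("ISO/IEC 42001", ["ai_management_system"])

implemented = {"risk_classification", "govern", "map", "measure", "manage"}
for framework, missing in catalog.gaps(implemented).items():
    print(framework, "->", missing or "no gaps")
```

Adding a new jurisdiction then means registering one more framework, while the controls themselves are implemented once and reused.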

Best Practices for Compliance-Centric AI Lifecycle Management

| Lifecycle Phase | Best Practices for Compliance |
| --- | --- |
| Design & Planning | Conduct use-case impact assessments; define risk level; document intended purpose |
| Data Collection | Ensure data quality, fairness, and representativeness; log data lineage |
| Training & Testing | Apply bias detection tools; maintain experiment records; conduct adversarial testing |
| Deployment | Validate model accuracy and explainability; implement access controls and audit trails |
| Monitoring | Enable drift detection, retraining schedules, and incident reporting channels |
| Retirement | Ensure secure deactivation; preserve historical logs for auditability |
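The table above can also be read as a set of gates: a model only moves to the next phase once the evidence for the current one exists. Here is a minimal sketch of that idea. The phase keys and evidence names are hypothetical shorthand for the table entries, and `next_phase` is an illustrative helper rather than part of any standard.

```python
from typing import Dict, Iterable, List, Optional, Set

PHASES: List[str] = [
    "design_planning",
    "data_collection",
    "training_testing",
    "deployment",
    "monitoring",
    "retirement",
]

# Hypothetical evidence names derived from the best-practice table.
REQUIRED_EVIDENCE: Dict[str, Set[str]] = {
    "design_planning": {"impact_assessment", "risk_level", "intended_purpose"},
    "data_collection": {"data_quality_report", "data_lineage_log"},
    "training_testing": {"bias_report", "experiment_records", "adversarial_tests"},
    "deployment": {"accuracy_validation", "access_controls", "audit_trail"},
    "monitoring": {"drift_detection", "retraining_schedule", "incident_channel"},
    "retirement": {"deactivation_record", "archived_logs"},
}


def next_phase(current: str, evidence: Iterable[str]) -> Optional[str]:
    """Return the following phase, or None once retirement is complete.

    Raises ValueError if evidence for the current phase is incomplete.
    """
    missing = REQUIRED_EVIDENCE[current] - set(evidence)
    if missing:
        raise ValueError(f"cannot leave {current}: missing {sorted(missing)}")
    idx = PHASES.index(current)
    return PHASES[idx + 1] if idx + 1 < len(PHASES) else None


print(next_phase("data_collection", ["data_quality_report", "data_lineage_log"]))
# training_testing
```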

Adeptiv Ai aims to provide organizations with a platform that makes it easy to comply with regulations for AI Model Lifecycle Management (MLM), reducing compliance costs for the business and giving them the chance to connect with our diverse teams, who have over a decade of experience. For more detailed information, you can visit our website.

The Business Case for Proactive Compliance

In practice, compliance might look like just another box to check, but it’s actually a smart business move. When you prioritize compliance, you’re building real trust, not just with your consumers but with regulators as well. That’s huge. Plus, you’re sidestepping those nasty fines and the kind of bad press that can tank a brand overnight.

There’s also an efficiency angle here. If your models already meet the standards, you can reuse them, saving serious time and resources. Speed matters. And when it’s time to expand globally? Compliance gives you a smoother path—no getting tripped up by mismatched rules in other countries.

Bottom line: companies that weave compliance into their AI strategy aren’t just covering their bases—they’re setting themselves up for sustainable, ethical growth. It’s not just about avoiding problems; it’s about unlocking opportunities.

Conclusion

The future of AI model lifecycle management will be characterized by proactive governance, risk management, and adherence to regulations. As regulatory scrutiny grows, businesses will need to move from ad hoc AI lifecycle practices to an auditable and organized process. By designing for compliance today, you set yourself up for uninterrupted innovation tomorrow.

Now is the time for AI leaders, compliance officers, and technologists to work together and put in place lifecycle frameworks that meet not only present regulatory requirements but also the responsibility demands of the next generation of AI.