Ethical AI is the future, and the time to act is now.

For businesses, adopting ethical AI practices is not just a regulatory requirement; it’s a competitive advantage. By building trustworthy AI systems that align with global standards, companies can strengthen customer trust and contribute to the responsible deployment of AI across sectors.

As AI rapidly transforms industries and societies, ensuring its ethical use is more important than ever. In response, the frameworks established by the European Union, Council of Europe, and OECD provide critical guidelines for building AI systems that prioritize human rights, fairness, and transparency.

The European Union’s AI Act

The EU’s AI Act, adopted in 2024, is a landmark piece of legislation and the world’s first comprehensive legal framework for AI, setting the stage for global AI governance. It regulates AI applications by sorting them into four risk levels: unacceptable, high, limited, and minimal.

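Unacceptable Risk AI: AI systems considered a clear threat to people’s safety and rights, such as those that manipulate human behavior or enable government-led social scoring, are banned. Real-time facial recognition in public spaces by law enforcement is likewise prohibited, apart from narrowly defined exceptions.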

High-Risk AI: Applications in sectors like healthcare, transportation, education, and law enforcement must meet strict requirements such as robust data governance, risk assessment, and human oversight.

Limited Risk AI: Systems that pose limited risks, such as chatbots, are subject to transparency obligations: users must be told when they are interacting with an AI rather than a human.

Minimal Risk AI: Most AI applications currently in use in the EU, such as spam filters or AI-enabled video games, fall into this category.

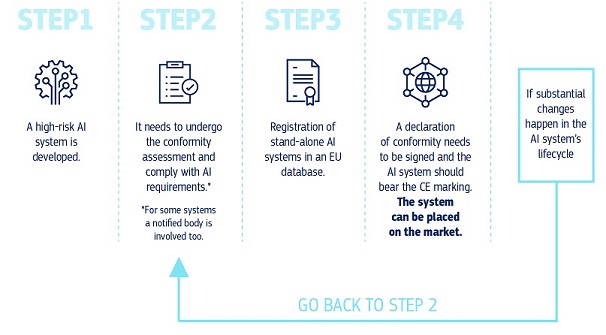

How does it all work in practice for providers of high-risk AI systems?

Image Source: An official website of the European Union

This risk-based approach helps ensure that AI technologies that threaten public safety, rights, and democratic values are subject to stringent requirements or banned altogether.
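To make the tiering concrete, the four risk levels can be sketched as a simple lookup from tier to obligations. This is an illustrative model only, not a legal classification tool: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_TIERS`, and `obligations_for` are hypothetical, and the example use cases and obligations are simplified from the descriptions above.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels described in the AI Act overview above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified summary of what each tier implies,
# paraphrased from the article rather than from the legal text.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned"],
    RiskTier.HIGH: ["data governance", "risk assessment", "human oversight"],
    RiskTier.LIMITED: ["disclose AI interaction to users"],
    RiskTier.MINIMAL: [],
}

# Illustrative use cases mentioned in the article, mapped to a tier.
# A real assessment depends on the concrete system and its context.
EXAMPLE_TIERS = {
    "government-led social scoring": RiskTier.UNACCEPTABLE,
    "healthcare application": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> list[str]:
    """Return the simplified obligations for a known example use case."""
    if use_case not in EXAMPLE_TIERS:
        raise KeyError(f"No example tier recorded for: {use_case!r}")
    return OBLIGATIONS[EXAMPLE_TIERS[use_case]]


if __name__ == "__main__":
    for case in EXAMPLE_TIERS:
        print(f"{case}: {EXAMPLE_TIERS[case].value} -> {obligations_for(case)}")
```

Keeping the tier and its obligations in separate tables mirrors the Act’s logic: a system is first classified, and the requirements then follow from the tier rather than from the individual product.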

The Council of Europe’s AI Treaty

The Council of Europe’s AI Treaty, opened for signature in September 2024, focuses on aligning AI systems with the principles of human rights, democracy, and the rule of law. Its emphasis on international cooperation in regulating AI across borders underscores the growing need for a global AI governance strategy. It complements the EU’s AI Act by addressing ethical concerns in AI development, especially transparency, non-discrimination, and privacy, in areas such as:

Human Rights and Democracy: The Treaty requires that AI applications not undermine democratic processes or fundamental human rights. It emphasizes transparency and human agency, so that AI systems do not control or mislead users.

Non-discrimination: To prevent biases, AI actors must ensure that AI systems do not perpetuate existing societal inequalities based on gender, race, or socioeconomic background.

Accountability Mechanisms: The Treaty mandates strong accountability frameworks in which AI providers and users are responsible for the ethical outcomes of their AI applications.

OECD AI Recommendations

The OECD’s AI Recommendations, established in 2019 and revised in 2024, are pivotal in guiding global AI governance efforts. They provide a flexible yet robust framework for fostering trustworthy AI by promoting inclusive growth, human rights, and transparency, and they emphasize a multi-stakeholder approach to AI governance that involves governments, private actors, and civil society. The framework rests on five core principles:

1. Inclusive Growth and Well-being: AI should contribute to sustainable development and reduce economic and social inequalities.

2. Respect for Human Rights and the Rule of Law: AI systems must respect the fundamental rights to privacy, freedom of expression, and non-discrimination.

3. Transparency and Explainability: AI systems should provide clear and understandable information about their decision-making processes.

4. Robustness and Security: Ensuring AI systems function reliably under normal and adverse conditions is crucial to avoid harm.

5. Accountability: AI actors should be accountable for AI’s impact on society, including potential biases and misuse.

Challenges in Implementing Ethical AI Applications

From a specialized AI consultant’s perspective, implementing ethical AI remains challenging for governments and businesses despite clear frameworks and growing regulation. The difficulties often stem from the complexity of the technology, the need for interdisciplinary collaboration, and the dynamic nature of AI advancements. Addressing them is essential for the responsible and effective deployment of AI systems.

Balancing Innovation with Regulation: Striking the right balance between fostering innovation and imposing strict regulations is a persistent challenge. AI’s rapid evolution makes it difficult for regulation to stay up to date, particularly with emerging technologies like generative AI and autonomous AI agents.

Data Privacy and Security: AI systems often rely on vast amounts of data, which raises concerns about privacy and security. Complying with global privacy standards, such as the GDPR, while still fostering innovation is crucial for AI’s ethical adoption.

Addressing Bias and Fairness: Bias in AI applications used in areas such as hiring or law enforcement can perpetuate existing societal inequalities. Ensuring diversity in AI datasets and creating mechanisms to identify and mitigate biases are essential for fair AI deployment.

Cross-border Collaboration: AI is inherently global, and a lack of uniform standards can lead to regulatory loopholes. As emphasized by the Council of Europe’s Treaty and the OECD’s recommendations, international cooperation is vital to ensure that AI applications are implemented ethically across borders.
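Of the challenges above, identifying bias is the one that most readily lends itself to a concrete check. One common starting point, offered here as a minimal sketch rather than a complete fairness audit, is to compare how often each group receives a favorable decision (sometimes called the demographic parity gap). The function names and the sample data below are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of favorable decisions (1) per group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favorable[group] += int(decision)
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates.

    A gap of 0 means every group is selected at the same rate;
    larger values indicate a disparity worth investigating.
    """
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up hiring decisions (1 = shortlisted) for two hypothetical groups.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

if __name__ == "__main__":
    print(selection_rates(decisions, groups))
    print(demographic_parity_gap(decisions, groups))
```

A gap on its own does not prove unfair treatment, since groups may differ in relevant ways, but it flags where a closer review of data and model behavior is needed.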

As artificial intelligence (AI) becomes more integrated into business operations, public services, and daily life, a comprehensive ethical framework is paramount. Governments and international organizations are shaping AI regulations to foster innovation while ensuring AI systems align with fundamental rights, democratic values, and ethical considerations.