At a Glance

- This post explains what Graph Neural Networks (GNNs) are and why they matter for complex relational data.

- It covers core architecture, key techniques (GCN, GAT, GraphSAGE), and practical applications across industries.

- We discuss AI agents, data governance, explainability, deployment challenges, and steps to build trustworthy graph AI.

- Includes FAQs, a techniques comparison table, infographic guidance, and actionable next steps for practitioners and decision-makers.

What are Graph Neural Networks (GNNs)?

Graph Neural Networks (GNNs) are a class of deep learning models explicitly designed to work on graph-structured data where entities (nodes) and relationships (edges) form non-Euclidean structures. While convolutional neural networks (CNNs) and recurrent models assume grid-like or sequential data, GNNs capture relational topology, edge attributes, and node-level features to learn context-sensitive, multi-hop representations. Graphs are ideal for social networks, molecular structures, knowledge graphs, financial transaction networks, transportation systems, and any domain where relationships carry predictive signal.
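To make the node, edge, and feature vocabulary concrete, here is a minimal sketch of a graph container in plain Python. The `Graph` class, its method names, and the toy account data are all hypothetical and chosen for illustration; real projects would normally use a graph library instead.

```python
from dataclasses import dataclass, field


@dataclass
class Graph:
    """A small directed graph with feature vectors on nodes and edges."""

    # Node id -> feature vector (illustrative, e.g. a risk score).
    node_features: dict = field(default_factory=dict)
    # (source, target) -> feature vector (illustrative, e.g. an amount).
    edge_features: dict = field(default_factory=dict)

    def add_node(self, node, features):
        self.node_features[node] = list(features)

    def add_edge(self, src, dst, features):
        # Refuse edges that point at unknown nodes.
        for endpoint in (src, dst):
            if endpoint not in self.node_features:
                raise KeyError(f"unknown node: {endpoint}")
        self.edge_features[(src, dst)] = list(features)

    def in_neighbors(self, node):
        """Nodes that send an edge into `node`, in insertion order."""
        return [s for (s, d) in self.edge_features if d == node]


# Toy transaction graph: three accounts, two payments into "acct_c".
g = Graph()
for name, feats in [("acct_a", [0.2]), ("acct_b", [0.9]), ("acct_c", [0.5])]:
    g.add_node(name, feats)
g.add_edge("acct_a", "acct_c", [120.0])
g.add_edge("acct_b", "acct_c", [75.0])
```

A GNN consumes exactly these three ingredients: who is connected to whom, what each node looks like, and what each connection looks like.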

How GNNs Work: Message Passing and Representation Learning

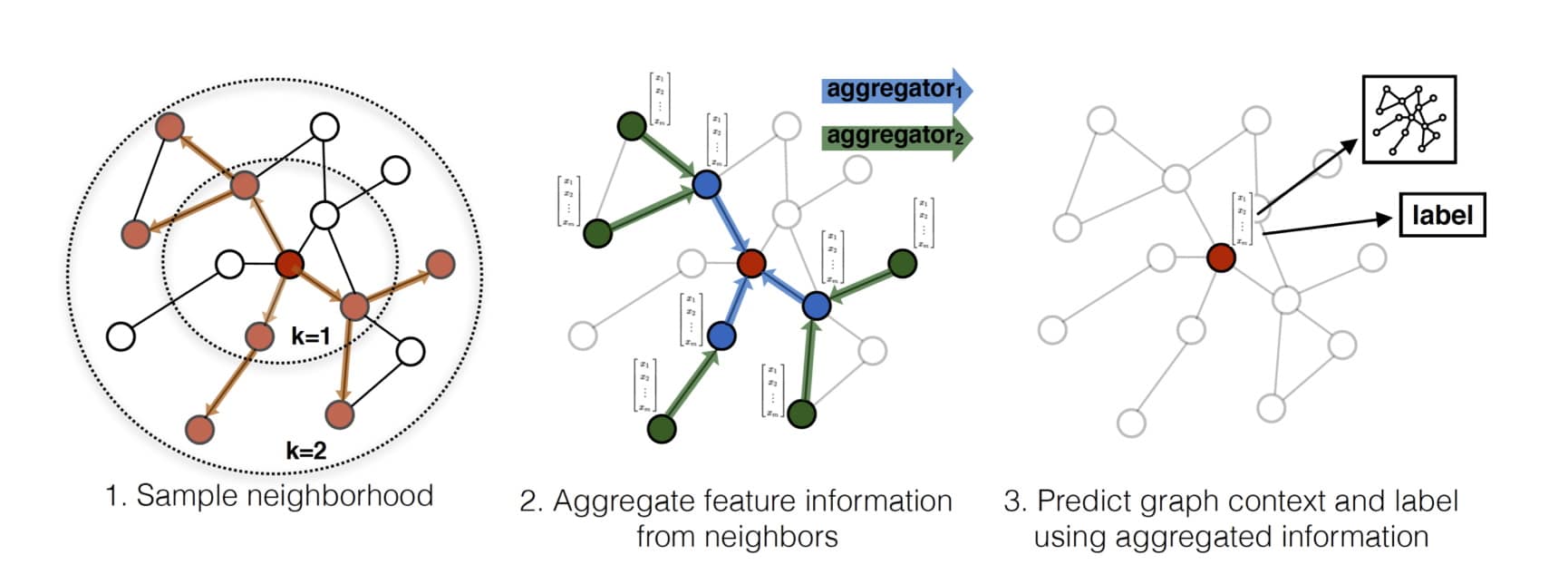

At the core of most GNNs is the message-passing paradigm. Each node holds a representation vector that is iteratively updated by aggregating information from neighbouring nodes and edges. The typical cycle involves:

- Message computation: For each edge, a message is computed from the source node’s embedding and any edge features such as type or weight.

- Aggregation: Incoming messages are combined with a permutation-invariant function (sum, mean, or max) or an attention-weighted sum, so the result does not depend on the order in which neighbours are visited while still capturing neighborhood context.

- Update: The aggregated message is combined with the node’s previous state via a neural network layer (for example, an MLP or GRU), producing an updated embedding.

- Readout: For graph-level tasks, node embeddings are pooled into a global representation used for classification or regression.

This iterative neighborhood aggregation enables GNNs to model multi-hop dependencies, discover structural motifs, and produce expressive features for downstream tasks.
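The cycle above can be sketched in a few lines of plain Python. This is a simplified illustration, not a trainable layer: the fixed 0.5/0.5 mix in the update step stands in for learned weights (an MLP or GRU in practice), and the function names and toy graph are assumptions made for the example.

```python
def message_passing_step(h, edges, edge_weight):
    """One round of message, sum-aggregate, update.

    h: dict mapping node -> embedding (list of floats)
    edges: list of directed (source, target) pairs
    edge_weight: dict mapping (source, target) -> float, default 1.0
    """
    dim = len(next(iter(h.values())))
    # Aggregation buffer: one zero vector per node.
    agg = {v: [0.0] * dim for v in h}
    for src, dst in edges:
        w = edge_weight.get((src, dst), 1.0)
        # Message: the source embedding scaled by the edge weight.
        msg = [w * x for x in h[src]]
        # Sum aggregation does not depend on edge order.
        agg[dst] = [a + m for a, m in zip(agg[dst], msg)]
    # Update: mix previous state with the aggregate, then apply ReLU.
    # The 0.5/0.5 mix is a placeholder for learned parameters.
    return {
        v: [max(0.0, 0.5 * old + 0.5 * new) for old, new in zip(h[v], agg[v])]
        for v in h
    }


def readout(h):
    """Graph-level readout: mean-pool all node embeddings."""
    dim = len(next(iter(h.values())))
    return [sum(vec[i] for vec in h.values()) / len(h) for i in range(dim)]


# Path graph a - b - c, stored as directed edges in both directions.
edges = [("a", "b"), ("b", "a"), ("b", "c"), ("c", "b")]
h0 = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}

h1 = message_passing_step(h0, edges, {})
h2 = message_passing_step(h1, edges, {})
graph_vector = readout(h2)
```

After one round, node `c` has only seen `b`, so the first feature (which starts on `a`) is still zero at `c`. After the second round it becomes non-zero, which is the multi-hop effect in miniature: each extra round extends a node's view by one hop.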

Key Techniques in GNNs

Graph Convolutional Networks (GCN) apply convolution-like operators to graphs, often using spectral approximations of the graph Laplacian to diffuse features among neighbours. They are well suited to semi-supervised node classification and underpin many GNN pipelines.
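As a rough illustration, the widely used GCN propagation rule adds self-loops to the adjacency matrix, normalises it symmetrically by node degree, and then applies a linear transform and a nonlinearity. The sketch below implements that rule with nested lists; the helper names, the toy path graph, and the 1x1 identity weight are assumptions for the example, and a real layer would learn `weight` by gradient descent.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adj, h, weight):
    """Compute ReLU(D^-1/2 (A + I) D^-1/2 H W) for a dense adjacency matrix."""
    n = len(adj)
    # Self-loops let each node keep part of its own features.
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]
    # Symmetric normalisation stops high-degree nodes from dominating.
    a_hat = [
        [a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
        for i in range(n)
    ]
    propagated = matmul(a_hat, h)          # diffuse features to neighbours
    transformed = matmul(propagated, weight)  # linear transform
    return [[max(0.0, x) for x in row] for row in transformed]


# Path graph 0 - 1 - 2 with a single feature that starts on node 0.
adj = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
h = [[1.0], [0.0], [0.0]]
weight = [[1.0]]  # identity stand-in for a learned weight matrix

out = gcn_layer(adj, h, weight)
```

With one layer, the feature reaches node 1 but not node 2; stacking a second layer would carry it one hop further.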

Graph Attention Networks (GAT) introduce attention mechanisms to selectively weigh neighbours, allowing the model to emphasize informative relationships and ignore noisy connections. GATs excel where neighbours have varying importance.
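A minimal sketch of the attention step, assuming a single head and skipping the learned linear projection that a full GAT layer applies to node features first. Each neighbour is scored with a LeakyReLU over the dot product of an attention vector `a` and the concatenated pair of features, and the scores are normalised with a softmax. The function names and toy values are hypothetical.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_weights(h_i, neighbor_feats, a):
    """Softmax over neighbours of LeakyReLU(a . [h_i || h_j])."""
    scores = [
        leaky_relu(sum(w * x for w, x in zip(a, h_i + h_j)))  # list concat
        for h_j in neighbor_feats
    ]
    # Subtract the max before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(h_i, neighbor_feats, a):
    """Attention-weighted sum of neighbour features."""
    alphas = attention_weights(h_i, neighbor_feats, a)
    return [
        sum(alpha * h_j[k] for alpha, h_j in zip(alphas, neighbor_feats))
        for k in range(len(h_i))
    ]


h_i = [1.0, 0.0]
neighbor_feats = [[1.0, 0.0], [0.0, 1.0]]
# Hypothetical attention vector that rewards the neighbour's first feature.
a = [0.0, 0.0, 2.0, 0.0]

alphas = attention_weights(h_i, neighbor_feats, a)
h_new = attend(h_i, neighbor_feats, a)
```

Here the first neighbour receives most of the weight, so the aggregated vector leans toward its features. In a trained model `a` is learned, which is what lets the network discover which relationships are informative.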

GraphSAGE takes an inductive approach: rather than learning a fixed embedding per node, it samples and aggregates neighbourhood information to generate embeddings for previously unseen nodes, which makes GNNs scalable to large and evolving graphs.
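The sample-and-aggregate idea can be sketched as follows, assuming a mean aggregator and omitting the learned weight matrix and nonlinearity that follow the concatenation in a full layer. The function name `sage_embed` and the toy data are made up for illustration.

```python
import random


def sage_embed(node, features, neighbors, num_samples, rng):
    """Concatenate a node's own features with the mean of sampled neighbours."""
    nbrs = neighbors.get(node, [])
    # Cap the neighbourhood size so cost stays bounded on hub nodes.
    if len(nbrs) > num_samples:
        sampled = rng.sample(nbrs, num_samples)
    else:
        sampled = list(nbrs)
    dim = len(features[node])
    if sampled:
        mean = [sum(features[u][k] for u in sampled) / len(sampled) for k in range(dim)]
    else:
        mean = [0.0] * dim  # isolated node: no neighbourhood signal
    return features[node] + mean  # list concatenation: [self || neighbours]


rng = random.Random(0)
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbors = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}

# A node that arrives later is embedded with the same function.
features["d"] = [0.5, 0.5]
neighbors["d"] = ["a", "b"]
emb_d = sage_embed("d", features, neighbors, num_samples=2, rng=rng)
```

Because the embedding is computed from features and sampled neighbours rather than looked up in a per-node table, node `d` needs no retraining to get a representation.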

Message Passing Neural Networks (MPNN) formalize the message, aggregate, update loop and generalize numerous variants within one framework. Additional advances include relational GNNs for heterogeneous graphs, positional encodings to capture structural roles, and scalable sampling algorithms for very large graphs.

Types of AI Agents & Their Relation to GNNs

AI agents are autonomous systems that perceive an environment, reason, and act. Types include reactive agents (mapping perceptions directly to actions), deliberative agents (planning ahead), learning agents (adapting through experience), and hybrid agents combining these capabilities. GNNs augment agent architectures by modelling relational context as graphs: multi-agent systems can represent inter-agent communication as edges; recommendation agents can use knowledge graphs as structured memory; robotic agents can apply GNNs to model object relationships for manipulation or navigation. In short, GNNs enable agents to reason about structure and dependencies, improving coordination, planning, and generalised decision-making across domains.

Applications of GNNs: Industry Use Cases

- Social Networks and Recommendation – Graph Neural Networks (GNNs) model user-item bipartite graphs, enabling personalised recommendations, community detection, and influence propagation analysis. These models capture collaborative filtering signals and context beyond co-occurrence statistics.

- Drug Discovery and Molecular Modelling – Molecules as graphs (atoms as nodes, bonds as edges) allow GNNs to predict properties, suggest modifications, and prioritise candidates for synthesis. This reduces experimental cycles and accelerates discovery.

- Fraud Detection in Finance – Financial fraud is often relational: rings of accounts and transaction paths reveal suspicious patterns. GNNs detect complex fraud rings by leveraging graph topology and relational features beyond individual transaction anomalies.

- Knowledge Graphs & Search – Search engines and AI assistants use GNNs to reason over knowledge graphs, enhance entity linking, and perform semantic retrieval, improving relevance for complex queries.

- Transportation and Infrastructure – Modelling roads, intersections, and signals as graphs, GNNs forecast traffic, optimise routing, and support smart-city planning by capturing spatial-temporal dependencies.

- Cybersecurity – Computer network graphs help detect intrusions and lateral movement by identifying anomalous communication patterns and compromised subgraphs.

Data Governance for GNNs: Definition and Best Practices

Data Governance for graph AI is the structured practice of managing the availability, integrity, usability, and security of graph datasets. Given that graphs encode relational context, governance must ensure provenance, quality, and ethical handling of connections as well as nodes. Essential elements include:

- Data lineage: Maintain complete provenance for node and edge attributes; track transformations and cleaning operations so each feature is traceable.

- Quality controls: Validate connectivity patterns, correct missing or spurious edges, and detect duplicate or fraudulent nodes and links.

- Privacy-preserving techniques: Employ anonymization, edge perturbation, federated learning, and differential privacy to mitigate re-identification risks inherent in relational structures.

- Access controls and encryption: Protect graph snapshots and model artifacts using role-based permissions and strong encryption for storage and transit.

- Ethical oversight: Regularly assess harms from relational inference such as group-level bias amplification, re-identification, or discriminatory propagation effects.

Effective data governance reduces bias, improves reproducibility, and ensures compliance with laws such as GDPR, while aligning with corporate AI governance frameworks and Responsible AI commitments.
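As one small example of the quality controls described above, the sketch below audits an edge list for three common problems: edges pointing at unknown nodes, self-loops, and exact duplicates. The function name and report keys are hypothetical, and whether a self-loop counts as an error depends on the dataset.

```python
def audit_edges(nodes, edges):
    """Return a report of suspicious edges, grouped by issue type."""
    node_set = set(nodes)
    seen = set()
    report = {"dangling": [], "self_loops": [], "duplicates": []}
    for src, dst in edges:
        # Dangling: at least one endpoint is not a known node.
        if src not in node_set or dst not in node_set:
            report["dangling"].append((src, dst))
        # Self-loop: an edge from a node to itself.
        if src == dst:
            report["self_loops"].append((src, dst))
        # Duplicate: the same directed pair appeared earlier.
        if (src, dst) in seen:
            report["duplicates"].append((src, dst))
        seen.add((src, dst))
    return report


nodes = ["a", "b", "c"]
edges = [("a", "b"), ("a", "b"), ("b", "b"), ("c", "z")]
report = audit_edges(nodes, edges)
```

Running a check like this before every training run, and logging the report alongside the dataset version, gives the lineage trail something concrete to record.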

Challenges & Limitations of GNNs

GNNs are powerful but not without drawbacks. Scalability is a primary concern: massive graphs with millions or billions of nodes demand sampling methods, partitioning strategies, and distributed training to remain tractable. Explainability remains a research frontier because aggregated neighborhood features can be opaque; techniques such as attention visualization and post-hoc explainers help but do not fully solve interpretability. Data quality and bias problems are magnified in graphs, because biased edges propagate through message passing and amplify unfairness. Dynamic graphs that evolve rapidly require incremental learning approaches or streaming architectures to remain current. Finally, deep GNN architectures face over-smoothing, where node embeddings converge and lose discriminative power after many layers.
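Over-smoothing is easy to reproduce on a toy graph. The sketch below repeatedly applies plain mean aggregation (with no learned weights, which is a deliberate simplification) to a four-node path and tracks the gap between the largest and smallest node value. The graph, starting values, and helper names are illustrative assumptions.

```python
def mean_aggregate(h, neighbors):
    """Replace each node's value with the mean over itself and its neighbours."""
    out = {}
    for v, nbrs in neighbors.items():
        group = [v] + nbrs
        out[v] = sum(h[u] for u in group) / len(group)
    return out


def spread(h):
    """Gap between the largest and smallest node value."""
    return max(h.values()) - min(h.values())


# Path graph a - b - c - d with clearly distinct starting values.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
h = {"a": 1.0, "b": 0.0, "c": 0.0, "d": -1.0}

spreads = [spread(h)]
for _ in range(20):  # 20 rounds stands in for a 20-layer network
    h = mean_aggregate(h, neighbors)
    spreads.append(spread(h))
```

The spread never increases from one round to the next and ends up close to zero, so after enough rounds the nodes are nearly indistinguishable. Residual connections and limiting depth are common ways to counter this in practice.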

Practical Considerations for Deployment

Deploying GNNs into production demands operational rigour. Build real-time inference pipelines and implement drift detection not only for feature distributions but for topology changes. Integrate explainability dashboards and automated bias reporting to surface issues early. Establish secure model registries capturing versioning, lineage, and deployment metadata. Design fallback logic and human-in-the-loop controls so high-risk decisions can be paused or escalated. Align deployment practices with your AI governance framework to ensure ethical, auditable outcomes consistent with Responsible AI principles.
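Topology drift can be monitored with very simple statistics before reaching for anything heavier. The sketch below compares two graph snapshots using the Jaccard overlap of their edge sets and the relative change in mean degree. The function names and both thresholds are hypothetical placeholders that would need tuning per workload.

```python
def normalise(edges):
    """Treat edges as undirected: (a, b) and (b, a) are the same edge."""
    return {tuple(sorted(e)) for e in edges}


def edge_jaccard(old_edges, new_edges):
    """Share of edges common to both snapshots, out of all edges seen."""
    old, new = normalise(old_edges), normalise(new_edges)
    union = old | new
    return len(old & new) / len(union) if union else 1.0


def mean_degree(edges):
    edge_set = normalise(edges)
    nodes = {v for e in edge_set for v in e}
    return 2 * len(edge_set) / len(nodes) if nodes else 0.0


def topology_drift(old_edges, new_edges, min_jaccard=0.8, max_degree_shift=0.25):
    """Flag drift when edge overlap drops or mean degree moves too far."""
    jaccard = edge_jaccard(old_edges, new_edges)
    old_deg, new_deg = mean_degree(old_edges), mean_degree(new_edges)
    shift = abs(new_deg - old_deg) / old_deg if old_deg else 0.0
    drifted = jaccard < min_jaccard or shift > max_degree_shift
    return {"jaccard": jaccard, "degree_shift": shift, "drifted": drifted}


old_snapshot = [("a", "b"), ("b", "c"), ("c", "d")]
new_snapshot = [("b", "a"), ("b", "c"), ("c", "d"), ("d", "e")]
drift_report = topology_drift(old_snapshot, new_snapshot)
```

In this toy case one new edge out of four is enough to push the overlap below the example threshold, so the snapshot is flagged even though mean degree barely moves. A flag like this would typically trigger a review or retraining job rather than an automatic rollback.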

Table: GNN Techniques Comparison

| Technique | Strengths | Use Cases |
| --- | --- | --- |
| GCN | Efficient propagation on homogeneous graphs | Node classification, semi-supervised tasks |
| GAT | Adaptive neighbour weighting via attention | Graphs where neighbours differ in importance |
| GraphSAGE | Inductive capability for large graphs | Dynamic graphs, recommender systems |
| MPNN | Generalized message-passing framework | Chemistry, molecular property prediction |

Future Directions

Research is advancing GNN scalability, temporal modeling, and multimodal integration with language models. Pretraining on large graph corpora, combining Transformers with GNN layers, and few-shot transfer learning are promising directions. Practically, industry will push for trustworthy GNNs that meet AI governance, explainability, and Responsible AI standards; seamless integration into enterprise data governance will be a competitive differentiator.

A practical next-step roadmap: begin with an AI inventory and risk classification focused on graph workloads, pilot explainability tools on representative subgraphs, integrate privacy-preserving pipelines, and establish governance KPIs tied to fairness and robustness. Prioritize external audits and continuous monitoring so models remain compliant as graphs evolve. These steps translate GNN research into reliable, enterprise-grade solutions aligned with Responsible AI and AI governance best p… for long-term trustworthy deployment now.

FAQs

1. What is the primary advantage of GNNs over traditional neural networks?

GNNs capture relational structure and multi-hop dependencies, enabling learning from connections not represented in grid-like data.

2. Can GNNs handle dynamic graphs?

Yes, provided you use specialized architectures, incremental update methods, and careful sampling. Dynamic graphs often require tailored retraining and monitoring strategies.

3. Are GNNs interpretable?

Interpretability is improving through attention mechanisms and post-hoc explainers, but full transparency remains an active research challenge.

4. How do I ensure privacy in graph data?

Apply anonymization, edge perturbation, differential privacy, federated training, strict governance, and access controls to mitigate identification risks.

5. Which industries benefit most from GNNs?

Pharmaceuticals, finance, social platforms, cybersecurity, transportation, and knowledge systems gain substantial value from relational modelling.

6. Do GNNs require more compute than CNNs?

Compute demands depend on graph size and density; very large or dense graphs can be more resource-intensive than typical CNN workloads.