At a Glance

- A practical overview of the EU AI Act and its implications for business leaders.

- What a robust AI Governance Framework must address: safety, transparency, accountability.

- Why Responsible AI is more than compliance: it’s a strategic advantage.

- Key steps CEOs should prioritize today to align AI deployment with ethical and regulatory standards.

- Concrete guidance on embedding governance, ethics, monitoring and risk-management in your AI lifecycle.

Introduction

Artificial Intelligence (AI) is reshaping industries at an unprecedented pace. From predictive analytics in finance to autonomous systems in healthcare and beyond, the promise of AI is enormous. Yet as adoption accelerates, so do the risks of opaque algorithms and bias. That is why the European Union introduced the EU AI Act, the world’s first comprehensive legal framework for how AI is developed, deployed and overseen.

For CEOs and senior business leaders, understanding this regulation isn’t merely about compliance—it’s a strategic imperative. Your ability to build, deploy and govern AI ethically will influence your reputation, growth trajectory and competitive edge. This blog delves into what the EU AI Act means for your business, why building an effective AI Governance Framework and practising Responsible AI are essential, and how you should act now.

What is the EU AI Act?

The EU AI Act (Regulation (EU) 2024/1689) is a landmark regulation governing AI systems placed on the EU market or used in the EU. It also reaches providers and deployers based outside the EU when their systems’ output is used in the EU.

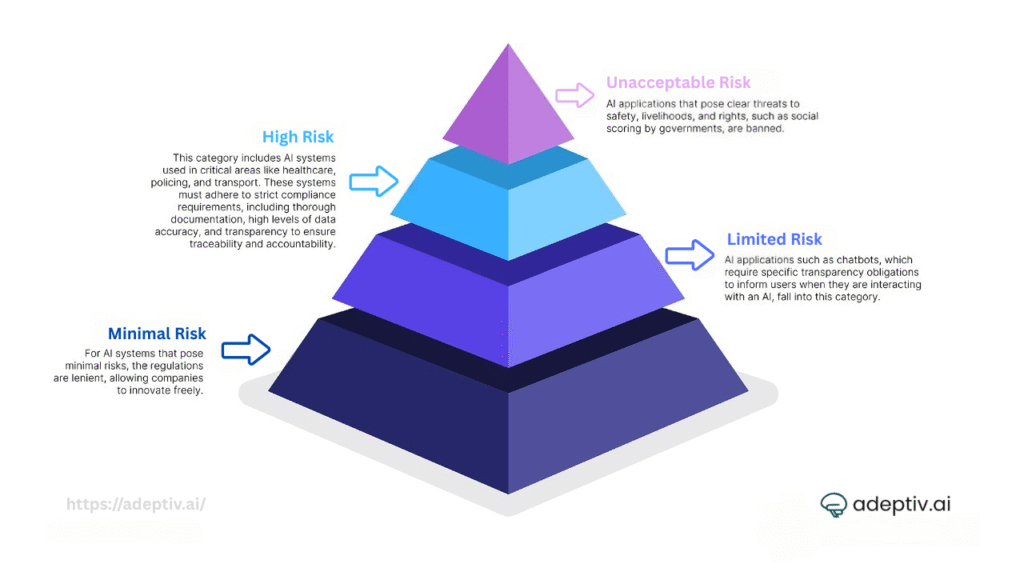

At its core, the Act adopts a risk-based approach, categorising AI applications into four levels:

- Unacceptable Risk – systems that pose severe threats to safety or fundamental rights and are prohibited.

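- High Risk – AI used in sensitive areas such as employment, education, credit scoring, critical infrastructure and medical devices, subject to stringent obligations.

- Limited Risk – systems such as chatbots and generative AI, subject to transparency obligations (for example, disclosing that people are interacting with AI or that content is AI-generated).

- Minimal Risk – low-impact applications such as spam filters, with no new mandatory obligations under the Act.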

For example, an AI system used to screen job applicants is classified as high risk whether or not it actually discriminates, while an internal recommendation engine is likely minimal risk.
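Mapping systems to tiers starts as an inventory exercise. The sketch below is a minimal illustration, assuming a hypothetical lookup from use-case tags to tiers (`USE_CASE_TIERS`, `AISystem` and `classify` are invented names, and the mapping is deliberately simplified); it is no substitute for legal review against the Act itself. Each system gets the strictest tier among its use cases, and unknown use cases default to high risk so they are flagged for review rather than silently passing.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class RiskTier(IntEnum):
    """The Act's four risk levels, ordered from least to most strict."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Hypothetical, simplified mapping of use-case tags to tiers.
# Real classification requires legal review against the Act itself.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "product_recommendation": RiskTier.MINIMAL,
}


@dataclass
class AISystem:
    name: str
    use_cases: list[str] = field(default_factory=list)


def classify(system: AISystem) -> RiskTier:
    """Return the strictest tier among a system's use cases.

    Unknown tags, or a system with no documented use cases, default to HIGH
    so they are flagged for review instead of silently passing.
    """
    tiers = [USE_CASE_TIERS.get(u, RiskTier.HIGH) for u in system.use_cases]
    return max(tiers, default=RiskTier.HIGH)


inventory = [
    AISystem("HireBot", ["cv_screening", "customer_chatbot"]),
    AISystem("ShopRecs", ["product_recommendation"]),
]
for system in inventory:
    print(system.name, classify(system).name)
# HireBot HIGH
# ShopRecs MINIMAL
```

Taking the strictest tier reflects the fact that one system can serve several purposes, and the most demanding one drives its obligations.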

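The scope: the Act applies to providers (organizations that develop AI systems and place them on the market or put them into service), deployers, importers and distributors. For the most serious infringements, namely prohibited practices, fines can reach €35 million or 7% of total worldwide annual turnover, whichever is higher.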
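The turnover-based caps are simple arithmetic. Under Article 99, an undertaking’s maximum fine is the higher of a fixed amount and a percentage of its total worldwide annual turnover for the preceding financial year; for SMEs and start-ups it is the lower of the two. The sketch below encodes the three tiers (€35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, €7.5 million or 1% for supplying incorrect information). The tier labels and the `max_fine_eur` helper are hypothetical, and the results are statutory ceilings, not the fines authorities will actually impose.

```python
# Article 99 fine tiers: (fixed cap in EUR, percent of worldwide annual turnover).
# Tier names are illustrative labels, not terms from the Act.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the statutory ceiling for a fine, in whole euros."""
    fixed_cap, pct = FINE_TIERS[tier]
    # Integer arithmetic avoids floating-point rounding on large turnovers.
    turnover_cap = worldwide_turnover_eur * pct // 100
    # Undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_eur("prohibited_practice", 2_000_000_000))            # 140000000
print(max_fine_eur("prohibited_practice", 100_000_000))              # 35000000
print(max_fine_eur("prohibited_practice", 20_000_000, is_sme=True))  # 1400000
```

For a group with €2 billion in turnover, the 7% figure (€140 million) exceeds the €35 million floor, which is why large enterprises face exposure well beyond the headline amount.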

Core Pillars: Safety, Transparency & Accountability

1. Safety Requirements for High-Risk AI Systems

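To comply, providers of high-risk AI systems must demonstrate that those systems are safe and reliable. Duties include:

- “Data governance” ensuring that training, validation and testing data are relevant, representative and examined for possible bias.

- “Human oversight” mechanisms that let people monitor, interpret and, where necessary, override or stop the system.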

- Documentation, validation and testing frameworks before deployment.
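Human oversight can be built into the decision path itself. One possible design, sketched below under our own assumptions, routes adverse outcomes and low-confidence predictions to a human reviewer and lets everything else proceed automatically. The `gate` function, the 0.9 confidence threshold and the choice of "reject" as the adverse outcome are hypothetical; the Act requires that people can effectively oversee and intervene, but does not prescribe this particular mechanism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutedDecision:
    outcome: str               # model's proposed outcome, e.g. "approve"
    confidence: float          # model's confidence in that outcome, 0.0 to 1.0
    needs_human_review: bool   # True means a person must confirm or override
    reason: str


# Hypothetical policy settings; each organization would set its own.
MIN_CONFIDENCE = 0.9
ADVERSE_OUTCOMES = frozenset({"reject"})


def gate(outcome: str, confidence: float) -> RoutedDecision:
    """Decide whether a model output can be acted on or needs human sign-off."""
    if outcome in ADVERSE_OUTCOMES:
        return RoutedDecision(outcome, confidence, True, "adverse outcome")
    if confidence < MIN_CONFIDENCE:
        return RoutedDecision(outcome, confidence, True, "low confidence")
    return RoutedDecision(outcome, confidence, False, "within policy")


print(gate("approve", 0.97).needs_human_review)  # False
print(gate("approve", 0.62).needs_human_review)  # True
print(gate("reject", 0.99).reason)               # adverse outcome
```

Routing every adverse outcome to a person, regardless of model confidence, keeps humans involved precisely where a wrong decision would hurt someone most.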

2. Transparency and Documentation

Transparency is non-negotiable. The Act mandates:

- Disclosures when a user interacts with an AI system (“you are speaking to a bot”).

- Record-keeping: high-risk systems must automatically log events so their results can be traced; in practice, that means recording model versions, data sources and outputs.

- Auditable design, training and performance records.
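A traceability log does not need to be elaborate to be useful. The sketch below appends one JSON Lines record per decision with a timestamp, model version, dataset version, a SHA-256 hash of the inputs and the output. The field names and the `log_decision` helper are our own assumptions, not a format the Act prescribes. Hashing inputs limits raw personal data in the log, though under GDPR a hash can still count as personal data.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def log_decision(log_path: str, model_version: str, dataset_version: str,
                 inputs: dict, output) -> dict:
    """Append one decision record to a JSON Lines file and return it."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "dataset_version": dataset_version,
        # Canonical JSON (sorted keys) so identical inputs always hash the same.
        "input_sha256": hashlib.sha256(
            json.dumps(inputs, sort_keys=True).encode("utf-8")
        ).hexdigest(),
        "output": output,
    }
    # Append mode: earlier records are never overwritten by this function.
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


# Usage: write two records to a temporary file and read them back.
log_file = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
log_decision(log_file, "credit-model-1.4.2", "train-2024-06",
             {"applicant_id": 17, "income": 52000}, "approve")
log_decision(log_file, "credit-model-1.4.2", "train-2024-06",
             {"income": 52000, "applicant_id": 17}, "approve")
with open(log_file, encoding="utf-8") as f:
    records = [json.loads(line) for line in f]
print(len(records))                                              # 2
print(records[0]["input_sha256"] == records[1]["input_sha256"])  # True
```

In production the same records would typically go to write-once storage with access controls, so auditors can trust that entries were not altered after the fact.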

3. Accountability and Ethical AI

Legal and ethical standards demand:

- Active governance frameworks enforcing principles such as fairness, security and privacy.

- Mitigation of bias, discrimination or privacy violations.

- Alignment with the EU Charter of Fundamental Rights and GDPR.

Implementing these pillars is foundational for any credible AI Governance Framework.

How the EU AI Act Impacts Business Operations

1. Product Development & Design

AI compliance isn’t an afterthought—it begins in design. Businesses developing AI must integrate the Act’s ethical and regulatory requirements from day one. That means dedicating resources, updating development pipelines, and balancing innovation with oversight.

2. AI Risk Management & Governance

CEOs must ensure comprehensive governance:

- Regular AI audits.

- Risk assessments tailored to each system’s risk classification.

- Cross-functional teams including legal, technical, and ethical experts.

3. Data Privacy & Protection

The EU AI Act works alongside GDPR. Organizations must ensure:

- Proper handling of personal and sensitive data.

- Transparent data-use policies.

- Robust safeguards against data breaches and misuse.

Strategic Opportunities: Compliance & Advantage

While some view regulation as a constraint, the smarter perspective sees it as strategic. By embracing Responsible AI and strong AI Governance, businesses can:

- Build trust and differentiate brand value.

- Access new markets via compliant solutions.

- Inspire investor confidence and accelerate growth.

- Pre-empt regulatory disruption, turning compliance into an enabler rather than a cost.

Many executives view ethical AI as a market differentiator. A recent survey found nearly half of CEOs consider Responsible AI a key driver of competitive advantage.

What This Means for CEOs: Priority Actions

As CEO, you are ultimately accountable for how your organization governs AI. Here’s what you need to do today:

- Map your AI landscape. Identify all systems—shadow or visible—and classify by risk.

- Appoint accountability. Name a Chief AI Officer or governance lead who answers to the board.

- Embed ethics in every process. Build Responsible AI into design, deployment and monitoring.

- Audit for compliance. Use external reviews and align with standards such as ISO/IEC 42001 and the NIST AI RMF, as well as with the requirements of the EU AI Act.

- Monitor continuously. Set up dashboards, logs and alerts so that every AI decision is traceable.

- Communicate openly. Stakeholders, clients and employees must understand how your organization handles AI risk and ethics.

The path forward isn’t just about following rules—it’s about building an organization where AI doesn’t just work, it works right.

Integrating an AI Governance Framework

Here’s a quick blueprint you can adopt:

| Stage | Core Activities |
| --- | --- |
| Inventory & Classification | Catalogue all AI systems; classify each by the Act’s risk tiers (unacceptable, high, limited, minimal). |
| Ethics & Policy Layer | Define Responsible AI principles: fairness, transparency, privacy, accountability. |
| Technical Safeguards | Embed explainability, bias tests, data lineage and privacy-protection tools. |
| Monitoring & Auditing | Implement logs, model-drift alerts and versioning; commission external audits. |
| Governance Bodies | Cross-functional AI ethics board, AI governance committee, accountability roles. |
| Compliance Mapping | Map controls to ISO/IEC 42001, the NIST AI RMF, the EU AI Act and other applicable regulations. |
| Incident Response | Protocols for failures: rollback, human review, communication, remediation. |

By combining Responsible AI (principles and ethical design) with the structural backbone of an AI Governance Framework, you build resilience and scalability.
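The model-drift alerts in the monitoring stage of the blueprint can start from a single statistic. The sketch below uses the Population Stability Index (PSI), one common way to compare the distribution of a model’s inputs or scores in production against a baseline, binned into the same buckets. The 0.2 alert threshold is a widely used rule of thumb (some teams use 0.25), not a figure from the EU AI Act or any standard, and the function names are hypothetical.

```python
import math


def psi(baseline: list[float], current: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Each argument holds the share of observations in each bin (shares sum to 1).
    Empty bins are floored at eps so the logarithm stays defined.
    """
    if len(baseline) != len(current):
        raise ValueError("baseline and current must use the same bins")
    total = 0.0
    for b, c in zip(baseline, current):
        b, c = max(b, eps), max(c, eps)
        total += (c - b) * math.log(c / b)
    return total


def drift_alert(baseline: list[float], current: list[float], threshold: float = 0.2) -> bool:
    """Return True when PSI exceeds the (assumed) alert threshold."""
    return psi(baseline, current) > threshold


baseline = [0.25, 0.25, 0.25, 0.25]  # score distribution at validation time
current = [0.10, 0.20, 0.30, 0.40]   # same bins, observed in production
print(round(psi(baseline, current), 3))  # 0.228
print(drift_alert(baseline, current))    # True
```

An alert like this does not prove the model is wrong; it signals that production data no longer looks like the data the model was validated on, which should trigger the human review and incident-response steps in the blueprint.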

Conclusion

The era of unchecked AI experimentation is ending. With the EU AI Act now shaping how AI is developed, deployed and governed, organizations must act decisively. A strong governance foundation paired with ethical design isn’t optional—it’s strategic.

For today’s CEOs, the questions are clear:

- Have you mapped your AI systems and classified their risk level?

- Are your policies, controls and monitoring infrastructure aligned with the new regulation?

- Do you view AI governance not as a cost center but as a lever for trust, value and competitive differentiation?

By answering “yes” to these questions and embedding a robust AI Governance Framework, you position your business to thrive in a regulated, AI-driven future—one that demands compliance, transparency, ethics and innovation in equal measure.

FAQs

Q1. What is the difference between Responsible AI and AI governance?

Responsible AI refers to ethical design and deployment — fairness, transparency, privacy. AI governance is the systems, policies and oversight that operationalize those principles within an organization.

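Q2. When does the EU AI Act become enforceable?

The Act entered into force on 1 August 2024 and applies in phases: prohibited practices and AI-literacy duties from 2 February 2025, obligations for general-purpose AI models from 2 August 2025, most remaining provisions (including obligations for the high-risk systems listed in Annex III) from 2 August 2026, and rules for high-risk AI embedded in regulated products from 2 August 2027.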

Q3. What are the key penalties for non-compliance?

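The highest tier, up to €35 million or 7% of total worldwide annual turnover (whichever is higher), applies to prohibited practices. Most other violations, including breaches of high-risk obligations, can incur up to €15 million or 3%, and supplying incorrect information to authorities up to €7.5 million or 1%.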

Q4. How can CEOs prepare their organizations?

CEOs should prioritise an AI inventory, designate accountable leadership, embed ethical controls in design, ensure continuous monitoring, and align with standards such as ISO/IEC 42001 and the NIST AI RMF.

Q5. Is compliance with the EU AI Act just a European concern?

No. The Act also applies to providers and deployers outside the EU when their AI systems are placed on the EU market or their output is used in the EU. Given that reach, it is widely expected to act as a de facto global benchmark.