At a Glance

- AI is transforming industries — but compliance and ethical governance are becoming non-negotiable.

- Global regulations like the EU AI Act, NIST AI RMF, and ISO 42001 are reshaping how AI models are developed and maintained.

- Future-ready organizations are embedding compliance, transparency, and human oversight into every stage of AI Model Lifecycle Management.

- This blog explores how businesses can design AI governance frameworks to stay compliant, ethical, and scalable in the age of Responsible AI.

The Compliance Imperative in the AI Era

Artificial Intelligence is no longer a supporting technology; it is the core of modern enterprise strategy. Yet as adoption scales, so do risks related to bias, accountability, and regulatory non-compliance. Lawmakers around the world are responding with measures ranging from the European Union’s AI Act to the Texas Responsible AI Governance Act (2025), imposing strict oversight on AI systems that affect human lives, economic fairness, or safety.

For organizations, this means one thing: the era of “move fast and break things” is over. The new imperative is to govern with care and prove compliance.

Effective AI Model Lifecycle Management (MLM) is now the backbone of that compliance effort—enabling organizations to monitor, document, and audit every stage of model development, deployment, and retirement.

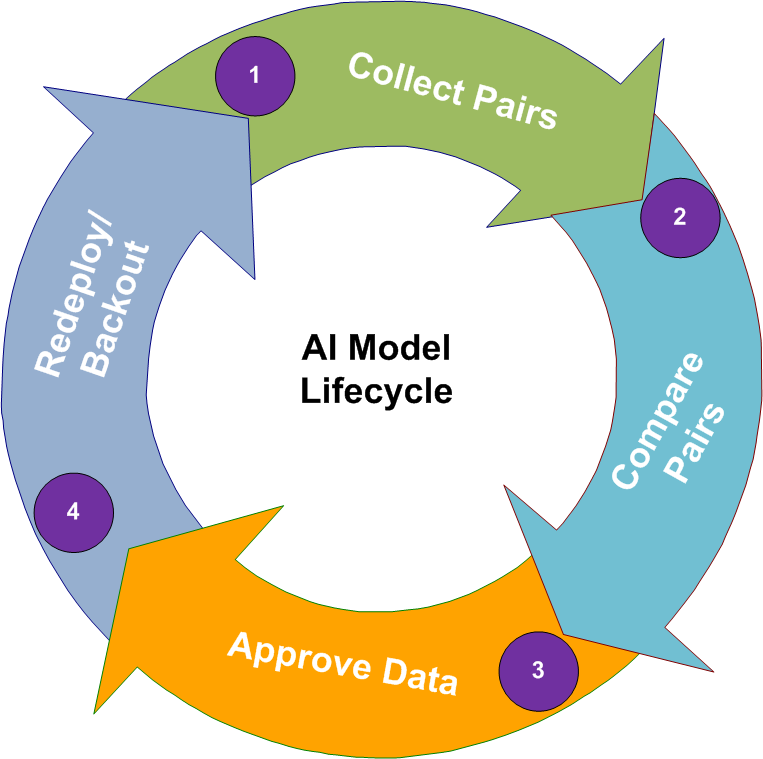

What is AI Model Lifecycle Management?

AI Model Lifecycle Management (MLM) refers to the structured oversight of AI systems from initial design to final decommissioning. It ensures that every model developed aligns with ethical, legal, and operational standards.

The lifecycle typically includes:

- Strategy & Design: Define the purpose, ethical considerations, and expected impact.

- Data Collection: Ensure fairness, representativeness, and quality of data sources.

- Development & Training: Build models using transparent methodologies and document experiments.

- Validation & Testing: Test for bias, explainability, and robustness.

- Deployment: Implement with access controls, monitoring, and audit trails.

- Monitoring & Maintenance: Detect model drift, retrain when necessary, and maintain version control.

- Retirement: Securely deactivate outdated models and preserve documentation for audits.

This process isn’t just a technical checklist—it’s the foundation of trustworthy and Responsible AI.
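The stages above can be sketched as a small state machine that refuses out-of-order transitions and keeps a history for audits. This is a minimal illustration, not a reference implementation: the `Stage` names mirror the list above, while the `ALLOWED` transition rules (including the loop from monitoring back to training) and the `ModelLifecycle` class are assumptions made for this example.

```python
from enum import Enum


class Stage(Enum):
    STRATEGY_DESIGN = "strategy_design"
    DATA_COLLECTION = "data_collection"
    DEVELOPMENT_TRAINING = "development_training"
    VALIDATION_TESTING = "validation_testing"
    DEPLOYMENT = "deployment"
    MONITORING_MAINTENANCE = "monitoring_maintenance"
    RETIREMENT = "retirement"


# Hypothetical transition rules: stages move forward one step, failed
# validation or detected drift loops back to training, retirement is final.
ALLOWED = {
    Stage.STRATEGY_DESIGN: {Stage.DATA_COLLECTION},
    Stage.DATA_COLLECTION: {Stage.DEVELOPMENT_TRAINING},
    Stage.DEVELOPMENT_TRAINING: {Stage.VALIDATION_TESTING},
    Stage.VALIDATION_TESTING: {Stage.DEPLOYMENT, Stage.DEVELOPMENT_TRAINING},
    Stage.DEPLOYMENT: {Stage.MONITORING_MAINTENANCE},
    Stage.MONITORING_MAINTENANCE: {Stage.DEVELOPMENT_TRAINING, Stage.RETIREMENT},
    Stage.RETIREMENT: set(),
}


class ModelLifecycle:
    """Tracks one model's current stage and the path it took to get there."""

    def __init__(self, model_id: str):
        self.model_id = model_id
        self.stage = Stage.STRATEGY_DESIGN
        self.history = [Stage.STRATEGY_DESIGN]

    def advance(self, target: Stage) -> None:
        # Reject any jump the rules do not allow, e.g. skipping validation.
        if target not in ALLOWED[self.stage]:
            raise ValueError(f"{self.stage.value} -> {target.value} is not allowed")
        self.stage = target
        self.history.append(target)
```

With this in place, a call such as `ModelLifecycle("credit-scoring-v1").advance(Stage.DEPLOYMENT)` raises a `ValueError`, because a model in the design stage cannot skip straight to deployment.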

Why Lifecycle Management Matters More Than Ever

Poorly managed AI lifecycles can lead to bias, privacy violations, and reputational harm. Consider Amazon’s AI recruiting tool, which was scrapped after it learned gender bias from historical hiring data, or Clearview AI, which faced multiple lawsuits over collecting facial images without consent.

Regulators are responding swiftly. Under the EU AI Act, penalties for the most serious violations (prohibited AI practices) can reach €35 million or 7% of worldwide annual turnover, whichever is higher.

The message is clear: governance failures are no longer just ethical lapses; they’re financial liabilities.
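The "whichever is higher" rule quoted above reduces to taking the larger of a fixed cap and a share of turnover. The sketch below works that arithmetic through using only the two figures from the text. The function name and parameters are hypothetical, and the Act's lower penalty tiers for other kinds of violation are deliberately not modeled.

```python
def max_fine_eur(annual_turnover_eur: int,
                 fixed_cap_eur: int = 35_000_000,
                 turnover_pct: int = 7) -> int:
    """Upper bound of the fine: the larger of the fixed cap and a turnover share.

    Integer euros are used throughout to avoid floating-point rounding.
    """
    turnover_based = annual_turnover_eur * turnover_pct // 100
    return max(fixed_cap_eur, turnover_based)


# A company with EUR 2 billion in turnover: 7% is EUR 140 million, above the cap.
large = max_fine_eur(2_000_000_000)   # 140_000_000

# A company with EUR 100 million in turnover: 7% is EUR 7 million, so the cap applies.
small = max_fine_eur(100_000_000)     # 35_000_000
```

The two amounts meet at EUR 500 million in turnover. Above that point the percentage sets the ceiling.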

Key Trends Shaping the Future of AI Model Lifecycle Management

1. Lifecycle Documentation as a Compliance Mandate

Chapter III of the EU AI Act (Title III in the original proposal) requires providers of high-risk AI systems to maintain comprehensive documentation across the AI lifecycle, including:

- Intended purpose and scope of use

- Data sources and validation methods

- Risk assessment and mitigation plans

- Human oversight mechanisms

- Ongoing performance and impact evaluations

Forward-thinking organizations are investing in AI compliance automation platforms that generate, store, and update documentation in real time—creating audit trails that regulators trust.
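One way to picture such an automated documentation record is a structured object with one field per item in the list above, which can flag gaps and be serialized for an audit trail. This is a rough sketch under stated assumptions: the `LifecycleRecord` class and its field names are invented for illustration and do not reflect any specific platform or the Act's exact documentation schema.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class LifecycleRecord:
    """Hypothetical documentation record for a single model."""
    model_id: str
    intended_purpose: str
    data_sources: list[str]
    validation_methods: list[str]
    risk_mitigations: list[str]
    human_oversight: list[str]
    evaluations: list[dict] = field(default_factory=list)

    def log_evaluation(self, metric: str, value: float) -> None:
        # Append a timestamped entry so later reviews can see the trend.
        self.evaluations.append({
            "metric": metric,
            "value": value,
            "recorded_at": datetime.now(timezone.utc).isoformat(),
        })

    def missing_fields(self) -> list[str]:
        # Empty strings or lists count as undocumented; evaluations may start empty.
        return [name for name, value in asdict(self).items()
                if name != "evaluations" and not value]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)
```

A release pipeline could, for instance, block promotion whenever `missing_fields()` returns a non-empty list.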

2. Shift from Static to Dynamic Risk Management

AI models don’t stay static—data drifts, user behavior evolves, and risks change.

Dynamic risk management includes:

- Automated drift detection systems that alert teams when model outputs deviate from expected behavior.

- Continuous re-validation of AI systems after significant updates or environmental shifts.

- Real-time compliance dashboards integrating risk signals with governance frameworks.

Regulators increasingly expect continuous assurance, not one-time certifications.
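As one illustration of automated drift detection, the sketch below computes the Population Stability Index (PSI), a widely used measure of how far a live distribution has moved from a baseline. The choice of PSI, the ten equal-width bins, and the 0.2 alert threshold (a common rule of thumb) are assumptions made for this example; the text above does not prescribe a specific method.

```python
import math


def psi(expected: list[float], actual: list[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range fall into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: list[float], actual: list[float],
                threshold: float = 0.2) -> bool:
    # Hypothetical policy: alert when PSI exceeds the chosen threshold.
    return psi(expected, actual) > threshold


baseline = [i / 100 for i in range(100)]       # scores spread over [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]  # scores squeezed into [0.5, 1)
```

Here `drift_alert(baseline, baseline)` is `False`, while `drift_alert(baseline, shifted)` is `True` because half of the baseline bins are now empty.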

3. Integration of Human Oversight Across the Lifecycle

Even the most advanced AI systems require meaningful human oversight. Future-ready governance frameworks embed human decision checkpoints at critical junctures:

- Escalation protocols for high-risk decisions

- Role-based approvals for sensitive models

- Human-in-the-loop validation before deployment

This aligns with global frameworks like the NIST AI RMF, whose four core functions (Govern, Map, Measure, Manage) form a continuous cycle of risk oversight.
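The checkpoints listed above (role-based approvals and human-in-the-loop validation before deployment) can be sketched as a simple approval gate. The role names, risk labels, and `REQUIRED_ROLES` policy below are hypothetical and would be set by each organization's own governance policy.

```python
from dataclasses import dataclass, field


@dataclass
class DeploymentRequest:
    model_id: str
    risk_level: str  # hypothetical labels: "low" or "high"
    approvals: set[str] = field(default_factory=set)


# Hypothetical policy: roles that must sign off before a model goes live.
REQUIRED_ROLES = {
    "low": {"model_owner"},
    "high": {"model_owner", "compliance_officer", "domain_expert"},
}


def approve(request: DeploymentRequest, role: str) -> None:
    """Record a sign-off from a named role."""
    request.approvals.add(role)


def pending_roles(request: DeploymentRequest) -> set[str]:
    """Roles that still need to approve this request."""
    return REQUIRED_ROLES[request.risk_level] - request.approvals


def can_deploy(request: DeploymentRequest) -> bool:
    # Deployment is blocked until every required human sign-off is recorded.
    return not pending_roles(request)
```

A high-risk request stays blocked until all three roles have approved, which turns the escalation protocol into something a pipeline can enforce rather than a policy document alone.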

4. Adoption of AI Compliance Platforms

Enterprises are increasingly centralizing governance through AI Compliance Platforms that include:

- Unified model registries

- Automated documentation pipelines

- Risk classification engines (aligned with EU AI Act risk tiers)

- Policy-driven access and version controls

These tools not only support compliance but also streamline collaboration between legal, data science, and ethics teams—turning governance into a shared operational layer rather than a siloed burden.
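A toy version of a unified model registry with a risk classification step might look like the sketch below. The four tier names follow the way the EU AI Act's risk categories are commonly summarized. The tag-to-tier rules in `TAG_TIERS` are purely illustrative assumptions; real classification depends on legal review of the Act itself, not on keyword matching.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Hypothetical use-case tags mapped to tiers, for illustration only.
TAG_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "recruitment": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
}


def classify(tags: list[str]) -> RiskTier:
    """Return the strictest tier triggered by any tag; unknown tags count as minimal."""
    return max((TAG_TIERS.get(t, RiskTier.MINIMAL) for t in tags),
               default=RiskTier.MINIMAL)


class ModelRegistry:
    """Minimal in-memory registry keyed by (model_id, version)."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], RiskTier] = {}

    def register(self, model_id: str, version: str, tags: list[str]) -> RiskTier:
        tier = classify(tags)
        if tier is RiskTier.UNACCEPTABLE:
            # Assumed policy: refuse to register prohibited use cases at all.
            raise ValueError(f"{model_id} falls into a prohibited use case")
        self._entries[(model_id, version)] = tier
        return tier

    def by_tier(self, tier: RiskTier) -> list[tuple[str, str]]:
        return sorted(key for key, value in self._entries.items() if value is tier)
```

Keeping the tier next to each versioned entry lets legal and data science teams query the same source, for example with `registry.by_tier(RiskTier.HIGH)`.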

5. Global Harmonization & Interoperability

As AI regulations mature, the future lies in cross-border harmonization.

To stay agile, organizations must align their AI systems with:

- EU AI Act: Risk classification and conformity assessments

- OECD Principles: Transparency and accountability

- ISO/IEC 42001: AI management systems

- NIST AI RMF: Risk governance functions

The smartest organizations are already building modular compliance architectures that can adapt to evolving jurisdictions without rebuilding from scratch.
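One reading of a "modular compliance architecture" is a single set of internal controls, each mapped to the frameworks it helps satisfy, so that a new jurisdiction means adding mappings instead of rebuilding controls. The control names and the mapping in `CONTROL_MAP` below are invented for illustration and are not an authoritative crosswalk between these frameworks.

```python
# Hypothetical internal controls mapped to the frameworks named above.
CONTROL_MAP: dict[str, set[str]] = {
    "risk_classification": {"EU AI Act", "NIST AI RMF"},
    "transparency_notice": {"EU AI Act", "OECD Principles"},
    "management_system_review": {"ISO/IEC 42001"},
    "accountability_owner": {"OECD Principles", "ISO/IEC 42001", "NIST AI RMF"},
}


def controls_for(framework: str) -> set[str]:
    """All internal controls that contribute to the given framework."""
    return {control for control, frameworks in CONTROL_MAP.items()
            if framework in frameworks}


def gaps(implemented: set[str], framework: str) -> list[str]:
    """Controls still missing for a framework, given what is already in place."""
    return sorted(controls_for(framework) - implemented)


in_place = {"risk_classification"}
eu_gaps = gaps(in_place, "EU AI Act")       # ["transparency_notice"]
iso_gaps = gaps(in_place, "ISO/IEC 42001")  # ["accountability_owner", "management_system_review"]
```

Because each control is implemented once and reused across frameworks, supporting a new regulation amounts to adding its name to the relevant entries in the map.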

Best Practices for Compliance-Centric AI Lifecycle Management

| Lifecycle Phase | Best Practice for Compliance |
| --- | --- |
| Design & Planning | Conduct impact assessments, define intended use, assess risk level |
| Data Collection | Maintain data quality, fairness, and representativeness; record lineage |
| Training & Testing | Run bias audits, maintain experiment logs, conduct adversarial testing |
| Deployment | Validate accuracy, enable access controls, and maintain audit trails |
| Monitoring | Set up drift detection, retraining pipelines, and incident response plans |
| Retirement | Secure deactivation, retain records for auditability |

By following this structure, organizations can build a resilient AI Governance Framework that is both scalable and compliant by design.

The Business Case for Proactive Compliance

Treating compliance as an afterthought is costly—but making it part of your design process can be a strategic advantage.

- Trust & Transparency: Consumers and partners are more likely to engage with brands that show ethical AI practices.

- Operational Efficiency: Reusable, compliant models reduce rework and accelerate deployment.

- Market Expansion: Globally compliant systems simplify entry into new markets with stricter laws.

In other words, compliance is not a constraint—it’s a competitive differentiator.

Conclusion: Designing for Tomorrow’s AI Ecosystem

The future of AI Model Lifecycle Management will be defined by proactive governance, traceability, and transparency. Organizations that invest today in structured frameworks for monitoring, auditing, and ethical oversight will lead tomorrow’s AI economy.

Adeptiv AI empowers enterprises to operationalize AI Governance and Responsible AI across the model lifecycle — mapping policies, controls, and risks to global standards like ISO 42001 and the EU AI Act. With Adeptiv, organizations can innovate responsibly and stay audit-ready by design.

The bottom line: responsible innovation is sustainable innovation. Those who embed compliance early will be the ones shaping AI’s future—ethically, legally, and intelligently.

FAQs

1. What is AI Model Lifecycle Management?

AI Model Lifecycle Management is the end-to-end process of designing, developing, deploying, monitoring, and retiring AI systems with accountability, ethics, and compliance at every step.

2. Why is AI compliance critical for organizations?

Non-compliance can lead to multimillion-dollar fines, legal risks, and brand damage. Compliance ensures trust, transparency, and operational resilience.

3. How does the EU AI Act impact AI lifecycle management?

It classifies AI systems by risk and, for high-risk systems, mandates lifecycle documentation and continuous post-market monitoring, which makes proactive governance essential.

4. What role does human oversight play in AI governance?

Human oversight ensures accountability and transparency, especially in high-risk or safety-critical applications where automated decisions impact lives.

5. How can companies implement AI Governance frameworks effectively?

Adopt structured processes, leverage AI governance tools, and align with international standards like ISO 42001 and NIST AI RMF for scalable compliance.